Is VMware Clustering / VMotion Complex Compared to Microsoft Failover Clustering?

- Published Apr 30, 2009

My last post on VMware VMotion prompted several readers to protest, maybe because of its provocative title. What I did was compare VMware clustering with Microsoft failover clustering. I came to the conclusion that both add significantly to the complexity of the environment. Interestingly, most commenters said that yes, Microsoft clustering is complex, but no, VMware clustering is not, yet they failed to explain exactly why.

The complexity of clusters only partly lies in the software actually doing the clustering. What makes the stuff complex is the infrastructure you need for clustering. Let us compare Microsoft’s and VMware’s requirements:

Requirements for Microsoft Failover Clustering

Instead of defining hard requirements, Microsoft gives recommendations. In practice the following setup is often used:

- Shared storage on a SAN

- Dedicated private network for intra-cluster communication

- Similar hardware and software on all nodes

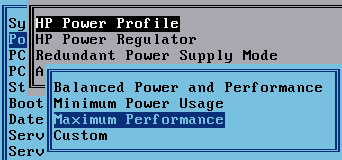

Requirements for VMware Clustering

In contrast to Microsoft, VMware very clearly defines hard requirements:

- Some kind of shared storage, typically on a SAN

- Dedicated private network for intra-cluster communication

- Compatible set of processors in all cluster nodes

It’s the Infrastructure!

Now, these requirements are strikingly similar. What should be noted, though, is that with VMware they are actual hard requirements, while with Microsoft they are only recommendations.

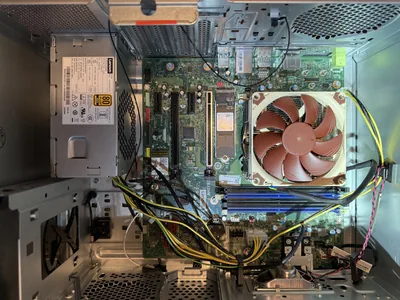

So, where does complexity come into play? With clusters, you simply have more components to worry about than with single servers. When administering a node, you always have to keep in mind that there are other nodes that should be configured similarly. Your network guys must set up and maintain an additional logical network. But most importantly, you need a SAN. Don’t tell me SANs are simple, because they are not. And, since SANs really need to be highly available, you need everything redundant: HBAs/NICs, switches, I/O controllers and so on. Just understanding a diagram of such a setup is way over the head of a large portion of the typical IT staff, let alone designing and managing it.
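To make the "other nodes should be configured similarly" point concrete, here is a minimal sketch of a configuration drift check across cluster nodes. It is purely illustrative: the node names, setting names and values are made up, and neither VMware nor Microsoft ships this function. It only shows the kind of bookkeeping that a single server never needs.

```python
from typing import Dict, Set

# Hypothetical representation: one flat dict of settings per node.
NodeConfig = Dict[str, str]


def find_drift(nodes: Dict[str, NodeConfig]) -> Dict[str, Set[str]]:
    """Return every setting whose value is not identical on all nodes,
    mapped to the set of distinct values that were seen."""
    all_keys: Set[str] = set()
    for cfg in nodes.values():
        all_keys.update(cfg)

    drift: Dict[str, Set[str]] = {}
    for key in sorted(all_keys):
        # A setting missing on a node counts as a difference, too.
        values = {cfg.get(key, "<missing>") for cfg in nodes.values()}
        if len(values) > 1:
            drift[key] = values
    return drift


# Made-up example data for a two-node cluster.
cluster = {
    "node-a": {"cpu_family": "family-x", "hba_firmware": "1.2", "cluster_vlan": "100"},
    "node-b": {"cpu_family": "family-x", "hba_firmware": "1.3", "cluster_vlan": "100"},
}

for setting, values in find_drift(cluster).items():
    print(f"{setting} differs across nodes: {sorted(values)}")
```

With one server, there is nothing to compare. With every additional node, HBA, and switch, the list of settings that have to stay in sync grows, no matter which vendor's clustering software sits on top.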

Wrap-Up

As before, I want to point out that I am not against clusters in any way. But I happen to think that managing clusters along with all the required infrastructure is not a trivial job. In many cases, the benefits of a clustered solution will outweigh the trouble, but there may be situations where clustering is not justified.

Also, I try not to be biased. I have already written articles “pro VMware and con Microsoft”.

When comparing the complexity of VMware and Microsoft clustering, I cannot find any fundamental difference. This is neither pro nor con VMware, but there seem to be a great many VMware evangelists around who take it as an affront if any of VMware’s products are considered less than miles ahead of the competition, especially Microsoft.

Of course, I am willing to learn. If you feel that I have misjudged the situation, please tell me exactly where. Do not write about how great or powerful VMotion is - I already know that - but explain why it requires a less complex infrastructure than Microsoft failover clustering.
