Guide: WordPress on Dockerized Apache on Hetzner Cloud

- Published Oct 24, 2023 Updated Dec 7, 2025

If you’ve followed this blog for a while, you may have noticed that I’ve used a traditionally installed (i.e., not dockerized) LAMP stack for its server setup since 2014. Only recently did I switch to Docker containers. Why? Maintenance. Much easier maintenance. If you take a look at the articles I’ve written over the years describing how to upgrade to newer versions of Ubuntu or - God forbid - PHP, you can’t help but realize what a godawful PITA it all is. Switching to Docker enforces (or at least strongly encourages) a strict separation of (public) code and (personal) configuration. With this new setup, upgrading from one PHP version to another involves nothing more than changing a version number in a text file.

During the development of this build, I made sure to only include sources/images that are highly likely to be available for decades (literally). I expect VMs built with the instructions below to be good for at least ten years, if not more (provided regular updates, of course).

Create a Hetzner Cloud Server

Create a New Project

In Hetzner’s Cloud Console, create a new project. Assign any meaningful name; it doesn’t really matter which; you can always change the name later.

Create an SSH Key (on Windows)

If you don’t have an SSH key yet, create a new SSH key with the following command (ssh-keygen has been part of Windows 10/11 since 2018):

ssh-keygen -t ed25519 -a 100

Specify a password when you’re asked to. The command creates two files:

- id_ed25519: your private key

- id_ed25519.pub: your public key

Store both files safely. The public key has your user and host names in the last column (format: user@host). That is a comment only; you can change the field’s contents if you want.

Create a New Server

Create a server as part of your new project. I selected Ubuntu 22.04 as the OS image because I’m familiar with it. As for the server type, you can start small (e.g., with a CPX21) and use the rescale feature to switch to a more powerful server later - after all, a cloud server is just a VM. For the record, it is possible to switch between shared vCPU and dedicated vCPU setups. The one thing you cannot do, however, is switch between CPU architectures (x86 ↔ Arm).

Add the public key of your SSH key for secure passwordless authentication. Give the new server a meaningful name.

Create a New Firewall

In the Cloud Console menu, select Firewalls > Create Firewall. Add the following inbound rules:

- SSH: YOUR IP, TCP, port 22

- ICMP: Any IPv4+IPv6, ICMP

- HTTP: Any IPv4+IPv6, TCP, port 80

- HTTPS: Any IPv4+IPv6, TCP, port 443

In the Apply to section, select your new server. Click Create Firewall.

Limiting SSH connections to your own IP address very effectively secures your server and does away with “illegal users” warnings in logwatch or similar tools. Of course, you may need to update the allowed IP address if your address changes.

Connect to the New Server

Fire up your favorite SSH client (I prefer Royal TS on Windows) and connect to your newly installed server using the following:

- Hostname: the server’s IPv4 or IPv6 address. Note that you may need to append a 1 to the IPv6 address displayed in Cloud Console if that ends with two colons (::).

- Username: root

- Key file: the private key that belongs to the public key you added during server creation, along with its password.
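The note about the trailing 1 can be sketched as a small shell helper. This is only an illustration: the function name ipv6_host is made up, and 2001:db8:1234:5678:: is a documentation address standing in for whatever Cloud Console shows for your server.

```shell
# Hypothetical helper: turn the address shown in Cloud Console into a host address.
ipv6_host() {
    case "$1" in
        *::) printf '%s1\n' "$1" ;;   # ends with "::" -> append 1
        *)   printf '%s\n' "$1" ;;    # already a complete address
    esac
}

ipv6_host '2001:db8:1234:5678::'
```

With OpenSSH's command-line client, the result could then be used along the lines of ssh -i ~/.ssh/id_ed25519 root@"$(ipv6_host '2001:db8:1234:5678::')".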

Disable Password Authentication

Disable password authentication by editing /etc/ssh/sshd_config:

# Replace the default "yes" with "no"

PasswordAuthentication no

Reload the SSHD configuration:

service ssh reload

Disable Automatic Updates

Hetzner’s Ubuntu image comes with automatic updates enabled. I’m disabling it because the necessary reboots aren’t performed automatically, so I have to manually intervene anyway:

systemctl stop unattended-upgrades

apt remove unattended-upgrades update-manager-core update-notifier-common

apt autoremove

rm -r /var/lib/update-manager

Install & Configure Docker on the Hetzner Cloud Server

Install Docker

We’re installing Docker along with Docker Compose from the official Docker repository according to the docs:

Update:

apt update

Note: The packages ca-certificates curl gnupg don’t need to be installed because they already are.

Add Docker’s GPG key:

install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

chmod a+r /etc/apt/keyrings/docker.gpg

Add Docker’s stable repository:

echo "deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu "$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

Update and install Docker:

apt update

apt install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Verify that Docker is installed correctly by running an image that prints a message and exits:

systemctl status docker

docker run --rm hello-world

Configure Docker

Log Rotation

By default, log rotation is disabled (docs). To enable log rotation, create a Docker config file /etc/docker/daemon.json with the following content:

{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}

Restart the Docker daemon (service docker restart). To verify, run docker info.
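To get a feeling for what these two options mean for disk usage, here is a rough back-of-the-envelope sketch. The function name log_budget_mb is made up, and the container count of five (caddy, whoami, apache, mariadb, redis) is an assumption based on the services set up later in this guide.

```shell
# Rough upper bound for json-file log usage in MB.
# Arguments: max-size in MB, max-file, number of containers.
log_budget_mb() {
    echo $(( $1 * $2 * $3 ))
}

# 10 MB per file, 3 files per container, 5 containers (assumed)
log_budget_mb 10 3 5
```

Keep in mind that log options from daemon.json only apply to containers created after the change; existing containers keep their old settings until they are recreated.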

Docker Data Directory

Create a Docker data directory:

mkdir -p /data/docker

Install & Configure Caddy for Automatic HTTPS Certificates

Why Caddy?

By using Caddy as a reverse proxy, we gain numerous benefits, including automagic TLS certificates and support for the HTTP/2 and HTTP/3 (QUIC) protocols, which are not trivial to implement in vanilla Apache.

Let’s Encrypt HTTP Challenge

This Caddy container setup is based on my earlier home server Caddy config, with one major difference: we don’t need the DNS challenge for Let’s Encrypt to verify that we actually own the domain for which we ask for HTTPS certificates; we can use the simpler HTTP challenge. After all, our web server is reachable from the public internet and, therefore, from Let’s Encrypt’s servers.

Preparation: Increase UDP Buffer Sizes for QUIC

The QUIC protocol (implemented by Caddy) requires larger buffers than are normally available in Linux (source). While we’re at it, we can also enable memory overcommit, which is required by Redis. Add the following to /etc/sysctl.conf:

net.core.rmem_max = 2500000

net.core.wmem_max = 2500000

vm.overcommit_memory = 1

Reboot and check the values with the following commands:

sysctl net.core.rmem_max

sysctl net.core.wmem_max

sysctl vm.overcommit_memory
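If you'd rather have a pass/fail answer than compare numbers by eye, the check can be sketched as a small function. The name check_min is made up; sysctl -n (shown in the comments) prints only the value of a parameter.

```shell
# Compare a kernel parameter against the minimum set in /etc/sysctl.conf.
# Arguments: parameter name, actual value, required minimum.
check_min() {
    if [ "$2" -ge "$3" ]; then
        echo "ok: $1 = $2"
    else
        echo "too low: $1 = $2 (expected at least $3)"
        return 1
    fi
}

# On the server, feed in the live values, e.g.:
#   check_min net.core.rmem_max "$(sysctl -n net.core.rmem_max)" 2500000
#   check_min net.core.wmem_max "$(sysctl -n net.core.wmem_max)" 2500000
check_min net.core.rmem_max 2500000 2500000
```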

Dockerized Caddy Directory Structure

This is what the directory structure will look like when we’re done:

/data/
└── docker/
    └── caddy/
        ├── config/
        ├── data/
        ├── container-vars.env
        ├── Caddyfile
        ├── caddy_security.conf
        └── docker-compose.yml

Create the new directories:

mkdir -p /data/docker/caddy/config

mkdir -p /data/docker/caddy/data

Caddy Docker Compose File

Create docker-compose.yml with the following content:

services:
  caddy:
    container_name: caddy
    hostname: caddy
    image: caddy:latest
    restart: unless-stopped
    ports:
      - "80:80"
      - "443:443"
      - "443:443/udp"
    networks:
      - caddynet
    env_file:
      - container-vars.env
    volumes:
      - ./Caddyfile:/etc/caddy/Caddyfile:ro
      - ./caddy_security.conf:/etc/caddy/caddy_security.conf:ro
      - ./data:/data
      - ./config:/config

  whoami:
    image: "containous/whoami"
    container_name: "whoami"
    hostname: "whoami"
    networks:
      - caddynet

networks:
  caddynet:
    attachable: true
    driver: bridge

The whoami service is created strictly for testing purposes. You can remove it once things are working as expected.

Caddy container-vars.env File

Everything that is specific to your deployment goes into the container-vars.env file. This includes domain names, IP addresses, API keys, email addresses, and so on.

Create container-vars.env with the following content:

MY_DOMAIN_1=example.com # replace with your domain

Caddyfile

Create Caddy’s configuration file Caddyfile in the same directory as the .yml file and paste the following content:

whoami.{$MY_DOMAIN_1} {
    reverse_proxy whoami:80
    import caddy_security.conf
}

HTTP Header Security Configuration File

Create the file caddy_security.conf in the same directory as the .yml file and paste the following content:

header /* {
    # Require HTTPS for subdomains, too
    Strict-Transport-Security "max-age=31536000; includeSubDomains; preload"

    # Disable MIME type sniffing
    X-Content-Type-Options nosniff

    # Don't allow embedding in other sites
    X-Frame-Options sameorigin

    # Only send the origin to other sites
    Referrer-Policy strict-origin-when-cross-origin

    # As strict as possible without breaking the site
    Content-Security-Policy "default-src https:; font-src https: data:; img-src https: data: 'self' about:; script-src 'unsafe-inline' https: data:; style-src 'unsafe-inline' https:; connect-src https: data: 'self'"

    # Disable powerful features we don't need
    Permissions-Policy "geolocation=(), camera=(), microphone=(), interest-cohort=()"
}

Once the server is up and running, verify your header configuration at securityheaders.com.

DNS A Record

Add the following A record to your DNS domain:

whoami.example.com 1.2.3.4 # replace with your server's public IPv4 address

Try to resolve the name on a machine in your network (e.g., nslookup whoami.example.com).

Start the Containers

Navigate into the directory with the .yml file and run:

docker compose up -d

Inspect the container logs for errors with the command docker compose logs --tail 30 --timestamps.

Test

Open https://whoami.example.com in your browser. It should display without certificate warnings or errors.

Install & Configure the LAMP Stack: Apache, PHP, MariaDB

Dockerized LAMP Directory Structure

This is what the directory structure will look like when we’re done:

/data/
└── docker/
    └── lamp/
        ├── config/
        │   ├── apache-misc/
        │   ├── php/
        │   └── vhosts/
        ├── data/
        │   ├── mariadb/
        │   ├── mariadb-init/
        │   ├── redis/
        │   └── www/
        ├── dockerfile-apache/
        │   ├── Dockerfile
        │   └── my-custom.conf
        ├── logs/
        │   └── apache/
        ├── container-vars-mariadb.env
        └── docker-compose.yml

Create the new directories. Ownership of the database files in data/mariadb is set to user/group ID 999 (UID/GID of the mysql user in the container) by MariaDB on its own. It is not necessary to run chown on the directory itself.

mkdir -p /data/docker/lamp/config/apache-misc

mkdir -p /data/docker/lamp/config/php

mkdir -p /data/docker/lamp/config/vhosts

mkdir -p /data/docker/lamp/data/mariadb

mkdir -p /data/docker/lamp/data/mariadb-init

mkdir -p /data/docker/lamp/data/redis

mkdir -p /data/docker/lamp/data/www

mkdir -p /data/docker/lamp/dockerfile-apache

mkdir -p /data/docker/lamp/logs/apache

LAMP Docker Compose File

Create docker-compose.yml with the following content:

services:
  apache:
    container_name: apache
    hostname: apache
    build: ./dockerfile-apache
    restart: unless-stopped
    depends_on:
      - mariadb
      - redis
    networks:
      backend: # backend communications to DB & Redis
      caddy_caddynet: # frontend communications
    expose:
      - 80 # HTTPS is handled by Caddy
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - ./config/apache-misc/.htpasswd:/etc/apache2/.htpasswd:ro
      - ./config/php/custom.ini:/usr/local/etc/php/conf.d/custom.ini:ro
      - ./config/vhosts:/etc/apache2/sites-enabled:ro
      - ./data/www:/var/www/html
      - ./logs/apache:/var/log/apache2

  mariadb:
    container_name: mariadb
    hostname: mariadb
    image: mariadb:lts
    restart: unless-stopped
    networks:
      backend: # backend communications only
    expose:
      - 3306
    env_file:
      - container-vars-mariadb.env
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - ./data/mariadb:/var/lib/mysql
      - ./data/mariadb-init:/docker-entrypoint-initdb.d/:ro

  redis:
    container_name: redis
    hostname: redis
    image: redis:latest
    restart: unless-stopped
    networks:
      backend: # backend communications only
    expose:
      - 6379
    volumes:
      - ./data/redis:/data

networks:
  caddy_caddynet:
    external: true
  backend:
    driver: bridge

Customized Apache & PHP Docker Image

We need a custom Apache & PHP Docker image in order to be able to install PHP extensions.

Create the file dockerfile-apache/Dockerfile with the following content:

FROM php:8.3-apache

# Silent install

ARG DEBIAN_FRONTEND=noninteractive

# Install ImageMagick (required by the imagick PHP extension)

RUN apt-get update && apt-get install -y \

libmagickwand-dev \

&& rm -rf /var/lib/apt/lists/*

# Use PHP's default production configuration

RUN mv "$PHP_INI_DIR/php.ini-production" "$PHP_INI_DIR/php.ini"

# Use the Docker PHP Extension Installer (https://github.com/mlocati/docker-php-extension-installer)

ADD https://github.com/mlocati/docker-php-extension-installer/releases/latest/download/install-php-extensions /usr/local/bin/

RUN chmod +x /usr/local/bin/install-php-extensions

# PHP extension installation

# See recommendations: https://make.wordpress.org/hosting/handbook/server-environment/

RUN install-php-extensions exif igbinary imagick intl mysqli opcache redis zip

# Enable Apache modules

# mod_rewrite: required by WordPress

# mod_remoteip: gets us the original client IP behind a reverse proxy with RemoteIPHeader

RUN a2enmod rewrite remoteip

# Disable logging to other-vhosts-access-log if no CustomLog is defined for a vhost

RUN a2disconf other-vhosts-access-log

# Set Apache's server name to get rid of the error message: Could not reliably determine the server's fully qualified domain name

RUN echo "ServerName localhost" >> /etc/apache2/apache2.conf

# Copy a custom configuration file

COPY my-custom.conf /etc/apache2/conf-enabled/

# Set UID & GID of the Apache user/group www-data to the default (33)

ARG UID=33

ARG GID=33

RUN usermod --uid $UID www-data && \

groupmod --gid $GID www-data

Note: if you modify Dockerfile later on, rebuild the image with the command docker compose build.

Custom Apache Configuration

We’re adding a configuration file in which we can override some of the default Apache configuration. Create the file dockerfile-apache/my-custom.conf with the following content:

# Enable log rotation for the default error.log file

ErrorLog "|/usr/bin/rotatelogs -l -n 10 ${APACHE_LOG_DIR}/error.server.log 86400"

Custom PHP Configuration

As officially recommended, we’re using PHP’s production config file php.ini-production as the basis for our configuration (see Dockerfile).

We’re putting our customizations into a separate file in the regular INI’s conf.d subdirectory. Create config/php/custom.ini with the following content:

; Disable insecure/dangerous functions

disable_functions = exec,system,shell_exec,passthru

; Opcache resources

opcache.memory_consumption=256 ; default: 128

opcache.interned_strings_buffer=16 ; default: 8

; Upload limit

upload_max_filesize = 50M ; default: 2 MB

post_max_size = 50M ; default: 8 MB

; PHP resources

max_execution_time = 120 ; default: 30

memory_limit = 256M ; default: 128 MB

; Stop PHP from sending the PHP version in the X-Powered-By HTTP header

expose_php = off

Apache Virtual Host Configuration

Create config/vhosts/example.com.conf with the following content:

<VirtualHost *:80>
    ServerName example.com
    ServerAlias www.example.com
    DocumentRoot /var/www/html/example.com
    DirectoryIndex index.php index.html

    RemoteIPHeader X-Forwarded-For
    RemoteIPInternalProxy 172.16.0.0/12

    ErrorLog "|/usr/bin/rotatelogs -l -n 10 ${APACHE_LOG_DIR}/error.example.com.log 86400"
    #CustomLog "|/usr/bin/rotatelogs -l -n 10 ${APACHE_LOG_DIR}/access.example.com.log 86400" combined

    # Protect wp-admin (optional security hardening)
    # The path must be located below the DocumentRoot inside the container
    <Directory /var/www/html/example.com/wp-admin>
        AuthType Basic
        AuthName "Please log on"
        AuthUserFile /etc/apache2/.htpasswd
        Require valid-user

        # Allow admin-ajax.php without auth for front-end functionality
        <Files "admin-ajax.php">
            Require all granted
        </Files>
    </Directory>

    # Protect wp-login.php at site root (optional security hardening)
    # <Files> matches the file name only, not a full path
    <Files "wp-login.php">
        AuthType Basic
        AuthName "Please log on"
        AuthUserFile /etc/apache2/.htpasswd
        Require valid-user
    </Files>

    # Regular public site
    <Directory /var/www/html/example.com/>
        AllowOverride All
        Require all granted
        Options -Indexes
    </Directory>
</VirtualHost>

Don’t forget: we don’t have to deal with HTTPS at all. That’s taken care of by Caddy. And replace all occurrences of example.com with your own domain name, of course.

Note: The configuration above contains (optional) protection of your admin URLs with basic authentication as a first line of defense.
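A word on RemoteIPInternalProxy 172.16.0.0/12: it tells mod_remoteip to trust the X-Forwarded-For header only when the request comes from an address in that range. Caddy talks to Apache over the Docker network caddy_caddynet, and Docker's default bridge networks usually get their subnets from this range (an assumption worth verifying on your host). The sketch below shows which addresses the range covers; the function name in_trusted_proxy_range is made up.

```shell
# Returns success if the IPv4 address lies in 172.16.0.0/12,
# i.e., between 172.16.0.0 and 172.31.255.255.
in_trusted_proxy_range() {
    first=${1%%.*}      # first octet
    rest=${1#*.}
    second=${rest%%.*}  # second octet
    [ "$first" -eq 172 ] && [ "$second" -ge 16 ] && [ "$second" -le 31 ]
}

in_trusted_proxy_range 172.18.0.3 && echo "172.18.0.3: trusted proxy"
in_trusted_proxy_range 192.168.1.10 || echo "192.168.1.10: not trusted"
```

To see which subnet your Caddy network actually uses, run docker network inspect caddy_caddynet on the Docker host.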

Note on Apache Log Rotation

Apache’s standard log rotation tool seems to be logrotate. In a regular (non-dockerized) setup, logrotate is pretty easy to configure and works well. Things are different in a Docker container, though. logrotate relies on cron for scheduled execution, and cron is typically not enabled in Docker images. That’s why I turned to Apache’s rotatelogs instead.

MariaDB Configuration

Password Hashes

We’re specifying MariaDB passwords as hashes to avoid storing them as plaintext. Generate a root and a user password - unfortunately this has to be done in an existing instance of MariaDB or MySQL (see Shell Access in the MariaDB Container below) with the SQL query:

# This SQL command generates the (SHA1) password hash

select password('your password');

Store the plaintext passwords in a safe place.

Note: SHA1 is not secure anymore. While MariaDB offers ed25519 as an alternative, PHP (WordPress) doesn’t (source). We’re, therefore, stuck with SHA1, but that’s not as bad as it sounds because our MariaDB instance won’t be accessible from the internet.
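If you don't have a MariaDB instance at hand, the same kind of hash can be computed on the command line. The hash format is an asterisk followed by the uppercase hex representation of SHA1(SHA1(password)). The following is a sketch that assumes the openssl command-line tool is installed; the function name mariadb_password_hash is made up.

```shell
# Sketch: compute a MariaDB (mysql_native_password) hash without a database.
# Format: "*" followed by the uppercase hex of SHA1(SHA1(password)).
mariadb_password_hash() {
    printf '%s' "$1" \
        | openssl dgst -sha1 -binary \
        | openssl dgst -sha1 -r \
        | cut -d ' ' -f 1 \
        | tr 'a-f' 'A-F' \
        | sed 's/^/*/'
}

mariadb_password_hash 'your password'
```

It's worth comparing one result with the output of select password(...) once you have a database running. Also note that a password passed on the command line ends up in your shell history.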

MariaDB container-vars-mariadb.env File

Create container-vars-mariadb.env with the following content:

MARIADB_ROOT_PASSWORD_HASH=YOUR_ROOT_USER_PASSWORD_HASH

MARIADB_DATABASE=wordpress_examplecom

MARIADB_USER=wp_examplecom

MARIADB_PASSWORD_HASH=YOUR_WORDPRESS_USER_PASSWORD_HASH

MARIADB_AUTO_UPGRADE=true

With the above entries, if MariaDB doesn’t find a database upon startup, it creates the new DB wordpress_examplecom and grants all access to the user wp_examplecom, which it also creates.

Appending the domain name to the database name is useful if you plan to host multiple sites (with different WordPress instances) on your server.
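The naming scheme can be sketched as a small helper that derives the database and user names from a domain. Both the function name site_slug and the rule "keep only lowercase letters and digits" are my reading of the example above, not something MariaDB requires.

```shell
# Hypothetical helper: reduce a domain to lowercase letters and digits.
site_slug() {
    printf '%s' "$1" | tr 'A-Z' 'a-z' | tr -cd 'a-z0-9'
}

domain=example.com
echo "MARIADB_DATABASE=wordpress_$(site_slug "$domain")"
echo "MARIADB_USER=wp_$(site_slug "$domain")"
```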

Migrate WordPress

The following guide assumes that you already have WordPress running on another server and want to transfer it without modification, keeping the domain name.

Create a Full Backup on the Old Server

I’m using UpdraftPlus on my WordPress sites for daily backups. UpdraftPlus is configured to include all files in the backup: plugins, themes, uploads, the WordPress core, as well as additional directories such as a downloads folder. However, those various components of the site are placed in different archives. That’s why I only use UpdraftPlus’ database backup for the migration.

Database Backup

Create a new DB backup in UpdraftPlus. Download it from the old server to your machine.

File System Backup

To create a full backup of all files on your old server, SSH into the old machine and run:

# CD into your site's parent directory first

tar -czvf example.com.tar.gz public_html

Download example.com.tar.gz from the old server to your machine.

Apache .htpasswd

If you’ve protected WordPress’ admin login /wp-admin with basic authentication like I have, copy /etc/apache2/.htpasswd from the old server to your machine.

Transfer the Backup to the New Server

Connect to the new server with a tool such as WinSCP and upload the backup files as described below.

DB Dump

- Rename the extension of the archive file with the DB dump from .gz to .sql.gz.

- Upload that database dump archive to data/mariadb-init on your new server.

Note: these instructions assume that your DB dump does not contain SQL instructions to create the database, too. The database is created by MariaDB based on the values of the environment variables (see above).

Files

Upload example.com.tar.gz to the web root of your new server: /data/docker/lamp/data/www/example.com.

CD into the directory with the .tar.gz file and extract the backup archive with the command:

tar --strip-components 1 -xvf example.com.tar.gz

Note: --strip-components 1 removes the topmost directory from the file paths in the archive, in my case, the unnecessary folder public_html.
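If you want to see the effect of --strip-components before touching the real backup, here is a throwaway demonstration in a temporary directory. All file and directory names inside it are placeholders.

```shell
# Build a throwaway archive that mimics the old server's layout.
demo=$(mktemp -d)
mkdir -p "$demo/old/public_html/wp-admin" "$demo/new"
echo '<?php // placeholder' > "$demo/old/public_html/index.php"
tar -czf "$demo/site.tar.gz" -C "$demo/old" public_html

# Extract it the same way as above, dropping the leading public_html/.
tar --strip-components 1 -xzf "$demo/site.tar.gz" -C "$demo/new"

# index.php and wp-admin now sit directly in the target directory.
ls "$demo/new"
```

To check how many leading directories your own archive has, list its contents first with tar -tzf example.com.tar.gz.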

Set the ownership of all website files and folders to www-data. Run the below on the Docker host:

chown -R www-data:www-data /data/docker/lamp/data/www/example.com

Upload .htpasswd to the directory /data/docker/lamp/config/apache-misc on the new server.

Adjust the WordPress Configuration

Edit wp-config.php:

- Update the database connection settings:

  - Host:
    define('DB_HOST', 'mariadb:3306');

  - Database:
    define('DB_NAME', 'wordpress_examplecom');

  - User:
    define('DB_USER', 'wp_examplecom');

  - Password:
    define('DB_PASSWORD', 'YOUR_WORDPRESS_USER_PLAINTEXT_PASSWORD');

- Replace the keys and salts in the file with new values generated by WordPress’ API.

- Tell WordPress to use HTTPS for CSS & similar files even though HTTPS is terminated at the Caddy reverse proxy (the isset() check avoids a PHP warning when the header is missing):
  if (isset($_SERVER['HTTP_X_FORWARDED_PROTO']) && $_SERVER['HTTP_X_FORWARDED_PROTO'] === 'https') $_SERVER['HTTPS'] = 'on';

- Tell WordPress to access the file system directly:
  define('FS_METHOD','direct');

Start the LAMP Containers

Navigate into the directory with docker-compose.yml and run:

docker compose up -d

MariaDB Logs

Inspect the MariaDB logs for errors with the command docker compose logs --tail 100 --timestamps.

Apache Logs

Inspect Apache’s logs with the command tail -n 100 logs/apache/error.server.log.

Let’s Encrypt Certificate via Caddy

Caddyfile

Replace the whoami section in Caddyfile with the following:

www.{$MY_DOMAIN_1} {
    redir https://{$MY_DOMAIN_1}{uri}
}

{$MY_DOMAIN_1} {
    reverse_proxy apache:80
}

Caddy Behind Cloudflare: Solving Let’s Encrypt’s HTTP Challenge

If you’re using Cloudflare as a reverse proxy in front of your new server, Caddy cannot solve Let’s Encrypt’s initial HTTP challenge because HTTPS is terminated at the Cloudflare servers operating in reverse proxy mode.

To work around this issue, navigate to Cloudflare’s DNS Records dashboard and switch your site’s records from proxied to DNS only.

Once Caddy has acquired the initial certificate successfully, you can switch back. Renewals work fine behind Cloudflare (source).

DNS A & AAAA Records

Modify the existing A and AAAA records (IPv4 and IPv6, respectively) so that they point to your new server’s IP address.

Make sure that your DNS domain name resolves to the new IP address on your machine before proceeding, e.g., by running nslookup example.com.

Reload Caddy’s Configuration

Instruct Caddy to reload its configuration by running:

docker exec -w /etc/caddy caddy caddy reload

Inspect the container logs for errors with the command docker compose logs --tail 30 --timestamps. You should see a message similar to the following:

,"logger":"tls.obtain","msg":"certificate obtained successfully","identifier":"www.example.com"}
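Instead of scanning the log output by eye, you can filter it for the success message. This is a sketch that assumes Caddy's JSON log lines look like the one shown above; the function name cert_obtained is made up.

```shell
# Filter Caddy's JSON log lines on stdin for a successful certificate
# issuance for the given host name.
cert_obtained() {
    grep -F '"msg":"certificate obtained successfully"' \
        | grep -F "\"identifier\":\"$1\""
}

# On the server (run in /data/docker/caddy):
#   docker compose logs caddy | cert_obtained www.example.com
printf '%s\n' '{"logger":"tls.obtain","msg":"certificate obtained successfully","identifier":"www.example.com"}' \
    | cert_obtained www.example.com
```

The function returns a non-zero exit code if no matching line is found, so it can also be used in a script.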

You should now be able to access your site at https://example.com.

Caddy Behind Cloudflare Error: Too Many Redirects

If your browser cannot connect to the new site, stating the error “too many redirects”, navigate to SSL/TLS > Overview in Cloudflare’s dashboard and switch the SSL/TLS encryption mode from Flexible to Full (strict).

Caddy Behind Cloudflare: Switch Proxied Mode Back On

If you switched Cloudflare from proxied to DNS only above, switch it back to the original proxied mode.

Post-Migration Work

W3 Total Cache Settings

As part of this migration, I switched from Memcached to Redis and, therefore, had to replace the Memcached hostname with the Redis host and port in the settings of the W3 Total Cache plugin: redis:6379.

Check WordPress Plugin Settings

Check your WordPress plugins for issues in the admin UI. Some plugins may have stored absolute file system paths that may not be valid on the new server anymore. In my case, that happened with the UpdraftPlus backup plugin, where I had configured a custom downloads directory outside of the regular WordPress file system structure.

Clean Up

- Delete the database dump file in data/mariadb-init.

- Delete the backup archive you uploaded.

Optional Steps

Install & Configure Postfix with Relay via SendGrid

The following steps show how to enable the server to send emails securely through a local installation of Postfix, relaying through the SendGrid service.

Note: Hetzner (rightfully) blocks outgoing SMTP communications for new accounts. Relaying to another server via port 587 is allowed, though.

Set a FQDN

Configure a fully-qualified host name:

hostnamectl set-hostname www.example.com

Install Postfix

apt install postfix libsasl2-modules

When asked, choose “no configuration”.

Configure Postfix as a Relay for SendGrid

Create /etc/postfix/main.cf:

smtpd_relay_restrictions = permit_mynetworks, reject

smtp_sasl_auth_enable = yes

smtp_sasl_password_maps = hash:/etc/postfix/sasl_passwd

smtp_sasl_security_options = noanonymous

smtp_sasl_tls_security_options = noanonymous

smtp_tls_security_level = encrypt

header_size_limit = 4096000

# Forward everything to this host

relayhost = [smtp.sendgrid.net]:587

# Allowed SMTP source addresses

mynetworks = /etc/postfix/mynetworks

# Strip subdomains of example.com

masquerade_domains = example.com

# Mapping table for "from" addresses

smtp_generic_maps = hash:/etc/postfix/generic

# Postfix version compatibility

compatibility_level = 3.6

Create /etc/postfix/mynetworks which contains the allowlist of source addresses (we’re allowing localhost only):

127.0.0.0/8

[::1]/128

Create the credentials file /etc/postfix/sasl_passwd:

[smtp.sendgrid.net]:587 apikey:YOUR_API_KEY

Apply permissions and update postfix hashtables:

chmod 600 /etc/postfix/sasl_passwd

postmap /etc/postfix/sasl_passwd

Create the from address mapping file /etc/postfix/generic with the following content:

root@example.com noreply@example.com

Run postmap on the mapping file:

postmap /etc/postfix/generic

Enable and start Postfix:

systemctl enable postfix

systemctl restart postfix

Send a Test Email

Connect via Telnet to Postfix:

telnet localhost 25

Paste the following (after adjusting the email addresses, of course):

helo admin

mail from: address@domain.com

rcpt to: address@domain.com

DATA

Subject: This is a test

From: address@domain.com

To: address@domain.com

Some text

End the DATA section as instructed by typing Enter followed by . and Enter.

You should see a message similar to: 250 2.0.0 Ok: queued as 7C0794769C.

End the session with Ctrl+]. Quit Telnet with q.

Troubleshoot Postfix

If something doesn’t work as expected, check Postfix’s log file /var/log/mail.log.

Custom MariaDB Configuration File

MariaDB’s Docker image uses the Ubuntu MariaDB variables with two changes that disable the authentication of user@hostname users (source).

Custom configuration files should only contain the actual changes from the default in a [mariadb] stanza, e.g.:

[mariadb]

setting = value

Install logwatch

logwatch monitors your server’s log files and sends you email reports at configurable intervals.

Install logwatch:

apt install logwatch

Change the report frequency from daily to weekly:

mv /etc/cron.daily/00logwatch /etc/cron.weekly/

Edit /etc/cron.weekly/00logwatch, changing the logwatch call so that you are emailed instead of root, HTML is used instead of text, and the date range processed is one week instead of a day:

# execute

/usr/sbin/logwatch --mailto address@example.com --format html --range '-7 days'

Before making changes to the default configuration for SSHD, copy it so that it’s not overwritten during updates:

cp /usr/share/logwatch/default.conf/services/sshd.conf /etc/logwatch/conf/services/

Modify the copied configuration file /etc/logwatch/conf/services/sshd.conf as follows:

# Ignore "illegal users" below a threshold (optional)

# $illegal_users_threshold = 4

# Disable IP lookups to speed up logwatch's processing and to prevent the classification of logwatch's emails as spam

$sshd_ip_lookup = No

Manual test run:

/usr/sbin/logwatch --mailto address@example.com --format html

We’re testing without the 7-day range parameter because, apparently, logwatch doesn’t do anything at all if it doesn’t have seven days’ worth of logs yet. Unfortunately, logwatch’s documentation is basically non-existent and what does exist is hard to find.

Make sure the email arrives as it should. If it doesn’t, check the Postfix log (see above).

Server Backup with restic

See my related post for an encrypted offsite backup solution with ransomware protection.

Migrate a Second WordPress Site

To migrate a second site to the new server, perform the steps as described above in the section Migrate WordPress, with one exception: we need to create the MariaDB database for the second site manually. As there already is a database, MariaDB won’t run init scripts anymore.

Apache

Apache Virtual Host Configuration

Create config/vhosts/example2.com.conf with the following content:

<VirtualHost *:80>
    ServerName example2.com
    ServerAlias www.example2.com
    DocumentRoot /var/www/html/example2.com
    DirectoryIndex index.php index.html

    RemoteIPHeader X-Forwarded-For
    RemoteIPInternalProxy 172.16.0.0/12

    ErrorLog "|/usr/bin/rotatelogs -l -n 10 ${APACHE_LOG_DIR}/error.example2.com.log 86400"
    #CustomLog "|/usr/bin/rotatelogs -l -n 10 ${APACHE_LOG_DIR}/access.example2.com.log 86400" combined

    # Protect wp-admin (optional security hardening)
    # The path must be located below the DocumentRoot inside the container
    <Directory /var/www/html/example2.com/wp-admin>
        AuthType Basic
        AuthName "Please log on"
        AuthUserFile /etc/apache2/.htpasswd
        Require valid-user

        # Allow admin-ajax.php without auth for front-end functionality
        <Files "admin-ajax.php">
            Require all granted
        </Files>
    </Directory>

    # Protect wp-login.php at site root (optional security hardening)
    # <Files> matches the file name only, not a full path
    <Files "wp-login.php">
        AuthType Basic
        AuthName "Please log on"
        AuthUserFile /etc/apache2/.htpasswd
        Require valid-user
    </Files>

    # Regular public site
    <Directory /var/www/html/example2.com/>
        AllowOverride All
        Require all granted
        Options -Indexes
    </Directory>
</VirtualHost>

Database

Upload the DB Dump

Upload the extracted database dump file to data/mariadb-init on your new server.

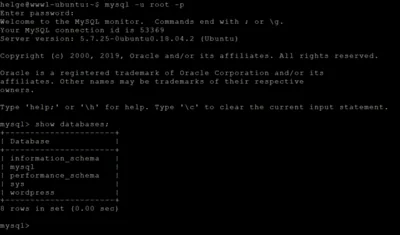

Shell Access in the MariaDB Container

Run the following to get an interactive Bash shell in the MariaDB container:

docker exec -it mariadb bash

Once inside the container, you can interact with the database after authenticating:

mariadb -u root -p

Create the WordPress Database Manually

Run the following commands at the MariaDB prompt to create the database for WordPress:

create database wordpress_example2com;

grant all privileges on wordpress_example2com.* TO "wp_example2com"@"%" identified by "PASSWORD";

flush privileges;

Import the DB Dump

Run the following commands at the MariaDB prompt to import the DB dump from the old server:

use wordpress_example2com;

source /docker-entrypoint-initdb.d/DUMPFILENAME;

exit

exit

Delete the DB dump file.

Caddy

Caddy Configuration for the Second Site

Add the following to Caddy’s container-vars.env file:

MY_DOMAIN_2=example2.com # replace with your domain

Caddyfile

Add the following section to Caddyfile:

www.{$MY_DOMAIN_2} {

redir https://{$MY_DOMAIN_2}{uri}

}

{$MY_DOMAIN_2} {

reverse_proxy apache:80

}

DNS A & AAAA Records

Modify the existing A and AAAA records (IPv4 and IPv6, respectively) so that they point to your new server’s IP address.

Make sure that your DNS domain name resolves to the new IP address on your machine before proceeding, e.g., by running nslookup example2.com.

Reload Caddy’s Configuration

Instruct Caddy to reload its configuration by running:

docker exec -w /etc/caddy caddy caddy reload

Inspect the container logs for errors with the command docker compose logs --tail 30 --timestamps. You should see a message similar to the following:

,"logger":"tls.obtain","msg":"certificate obtained successfully","identifier":"www.example2.com"}

You should now be able to access your site at https://example2.com.
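
To avoid scanning the log by hand, you could filter it for the success message shown above. This is only a sketch: the function name obtained_certs is hypothetical, and it assumes that Caddy writes one JSON object per line with the msg and identifier fields as in the excerpt.

```shell
# Hypothetical helper: read Caddy's JSON log lines from standard input and
# print the identifier of every successfully obtained certificate, once each.
obtained_certs() {
    grep -F '"msg":"certificate obtained successfully"' |
        sed -n 's/.*"identifier":"\([^"]*\)".*/\1/p' |
        sort -u
}

# Usage (run in Caddy's Compose directory):
#   docker compose logs --no-color | obtained_certs
```

With the Caddyfile section from this guide, I'd expect both example2.com and www.example2.com to show up once the certificates have been issued.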

Operations & Maintenance

Upgrading Linux OS & Docker Images

Upgrading the Linux OS

Run the following commands to upgrade Linux, remove packages that are no longer needed, and restart the system:

apt update

apt dist-upgrade

apt autoremove

shutdown -r now
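
The commands above restart unconditionally. If you'd rather reboot only when an update actually needs it, a sketch like the following might do. It relies on the marker file /var/run/reboot-required, which Ubuntu typically creates in that case; whether it is present on your image is an assumption worth verifying. The function name needs_reboot is hypothetical.

```shell
# Hypothetical helper: succeed if the marker file that Ubuntu creates after
# installing updates that need a restart exists.
# The optional argument overrides the marker path (handy for testing).
needs_reboot() {
    [ -e "${1:-/var/run/reboot-required}" ]
}

# Usage after "apt dist-upgrade" and "apt autoremove":
#   if needs_reboot; then shutdown -r now; else echo "No reboot required"; fi
```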

Upgrading the Caddy Container

Run the following commands to upgrade the Caddy container:

cd /data/docker/caddy/

docker compose pull

docker compose up -d

Upgrading the LAMP (Apache) Container

Run the following commands to upgrade the LAMP container:

cd /data/docker/lamp/

docker compose build --pull

docker compose up -d

After the upgrade, I’d advise checking the web server log for errors:

tail -n 100 logs/apache/error.server.log
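
The last 100 lines usually contain plenty of harmless notices. To see only entries of level error or worse, you could filter the log with a sketch like the one below. It assumes Apache's default error log format, in which each entry carries a [module:level] tag; the function name apache_errors is hypothetical.

```shell
# Hypothetical helper: print the lines of an Apache error log whose level is
# error or worse. Assumes the default format with a [module:level] tag,
# e.g. [php:error] or [core:crit].
apache_errors() {
    grep -E '\[[A-Za-z0-9_-]*:(error|crit|alert|emerg)\]' -- "$1"
}

# Usage (run in /data/docker/lamp/):
#   apache_errors logs/apache/error.server.log | tail -n 20
```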

Removing Obsolete Docker Images

Run the following command to remove Docker images that are no longer used after the container upgrades. Note that it also removes stopped containers, unused networks, and the build cache:

docker system prune -a

Adding Basic Authentication Users

Follow these steps if you’ve protected WordPress’ admin login /wp-admin with basic authentication and you need to add another user:

# Install htpasswd

apt install apache2-utils

# Add an entry for USERNAME to .htpasswd

htpasswd /data/docker/lamp/config/apache-misc/.htpasswd USERNAME
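
Before adding a user, it can be handy to check whether an entry already exists, because htpasswd silently replaces the password of an existing user. The following sketch relies only on the file format (one username:hash pair per line); the function name htpasswd_has_user is hypothetical.

```shell
# Hypothetical helper: succeed if the user (second argument) has an entry in
# the password file (first argument). Each line has the form "username:hash".
htpasswd_has_user() {
    cut -d: -f1 -- "$1" | grep -qxF -- "$2"
}

# Usage:
#   htpasswd_has_user /data/docker/lamp/config/apache-misc/.htpasswd USERNAME \
#       && echo "USERNAME already exists"
```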

Creating Users With Restricted SFTP Access (Chroot Jail) & Public Key Authentication

The following instructions show how to create a user named support who can access only what is explicitly mounted in that user’s directory.

SSHD Configuration

Edit the file /etc/ssh/sshd_config.

Replace the line:

Subsystem sftp /usr/lib/openssh/sftp-server

with the line:

Subsystem sftp internal-sftp

Add the following at the end of the file:

Match Group restricted_sftp

ChrootDirectory /var/sftp/%u

AllowTCPForwarding no

X11Forwarding no

ForceCommand internal-sftp

PasswordAuthentication no

User, Group, and Permissions

Run the following commands:

# Create a group for all restricted users

groupadd restricted_sftp

# Create a new user without a shell

useradd support -g restricted_sftp -d /var/sftp/support/ -s /bin/false

# Set a password for the new user

passwd support

# Create the directory we mount to in the next step

mkdir -p /var/sftp/support/mount

# Create the user's SSH configuration and give them access to it

mkdir -p /var/sftp/support/.ssh

touch /var/sftp/support/.ssh/authorized_keys

chown support:restricted_sftp /var/sftp/support/.ssh

chown support:restricted_sftp /var/sftp/support/.ssh/authorized_keys

chmod 700 /var/sftp/support/.ssh

chmod 600 /var/sftp/support/.ssh/authorized_keys

# Add the user's public key to /var/sftp/support/.ssh/authorized_keys

# This should be one line that looks similar to: ssh-ed25519 AAAA... USERNAME

# Add mount information to /etc/fstab (use your favorite editor)

MOUNT_TARGET_PATH /var/sftp/support/mount none bind 0 0

# Mount everything in fstab

mount -a

# Reload the SSH service

service ssh reload

Please note that every directory in the path /var/sftp/support must be owned by root (user and group) and must not be writable by anyone else; otherwise, sshd rejects the chroot and the login fails.
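
If the SFTP login fails right after authentication, wrong ownership or permissions somewhere along the chroot path are a likely cause. The following sketch walks from a directory up to / and reports the first component that violates sshd's rule for ChrootDirectory (owned by root, not writable by group or others). Both function names are hypothetical, and the sketch assumes GNU stat as shipped with Ubuntu.

```shell
# Hypothetical helper: succeed if a directory with the given owner (first
# argument) and octal mode (second argument) is acceptable to sshd as a
# chroot path component: owned by root, not writable by group or others.
is_safe_chroot_component() {
    [ "$1" = "root" ] || return 1
    case "$2" in
        *[2367]?|*[2367]) return 1 ;;   # group or other write bit is set
    esac
    return 0
}

# Hypothetical helper: check the given absolute directory and all of its
# parents up to /. Prints the first offender and fails if there is one.
check_chroot_path() {
    case "$1" in
        /*) p="$1" ;;
        *) echo "absolute path required: $1"; return 2 ;;
    esac
    while :; do
        owner="$(stat -c '%U' "$p")" || return 1
        mode="$(stat -c '%a' "$p")" || return 1
        if ! is_safe_chroot_component "$owner" "$mode"; then
            echo "unsafe: $p (owner $owner, mode $mode)"
            return 1
        fi
        [ "$p" = "/" ] && return 0
        p="$(dirname "$p")"
    done
}

# Usage:
#   check_chroot_path /var/sftp/support && echo "chroot path looks fine"
```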

Changelog

2025-12-07

- Added (optional) hardening of the WordPress admin URLs via basic authentication in the Apache vhost configuration.

2025-09-28

- Replaced PHP 8.2 with 8.3 in Dockerfile.

2024-06-12

- Optimized package installation with apt-get in Dockerfile.

2024-04-14

- Removed the version from docker-compose.yml; a warning mentions that it’s obsolete.

2024-02-15

- Added the section Adding Basic Authentication Users.

- Added the section Creating Users With Restricted SFTP Access (Chroot Jail) & Public Key Authentication.

2024-01-20

- Added the section Upgrading Linux OS & Docker Images.

2023-11-18

- Added the section Disable Automatic Updates.
