Persistent VDI in the Real World – Sizing

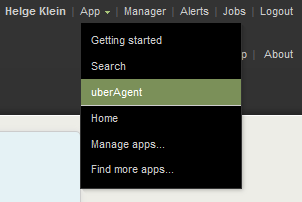

- Citrix/Terminal Services/Remote Desktop Services

- Published Jan 7, 2014 Updated Mar 29, 2014

This is the third article in a multi-part series about building and maintaining an inexpensive scalable platform for VDI in enterprise environments.

Previously in this Series

In the last article I explained that shared storage should be avoided, for two reasons: it is (very) expensive, and you, the VDI guy, are not in control. The storage guys are.

With local storage, things are different. Unfortunately we cannot use SSDs, though, because the server vendor component monopoly means we only have overpriced drives of questionable origin available. Instead we use traditional spinning drives, as fast ones and as many as we can get, in a RAID 10 configuration, complemented by a deduplication and IO optimization product like Atlantis Ilio.

Virtual Hardware

Before we can start with the sizing calculations we need to know what to size for - in other words: what kind of hardware are the virtual desktops going to get?

Our requirements state that a VM’s performance should at least be on par with a three year old desktop. Translated to virtual hardware the minimal configuration looks like this:

- 4 GB RAM

- 2 vCPUs

- 100 GB HDD

When reading about VDI you sometimes find numbers that are much smaller, e.g. only 2 GB RAM and 1 vCPU. Do not do that! Remember, we are talking about a full desktop replacement for knowledge workers. If you need to argue the case for more than one vCPU: do you remember what a single-core PC felt like when the virus scanner got working?

![A380, 747-8 FIGHT! by Angelo DeSantis under CC](/images/2014/01/generated/A380-747-8-FIGHT_400w.91b733e64b6c6adfaa6b570e8db44d372bfc015264844de45fc83249291ff757.webp)

A380, 747-8 FIGHT! by [Angelo DeSantis](http://www.flickr.com/photos/angeloangelo/) under [CC](http://creativecommons.org/licenses/by/2.0/)

Total Capacity

With the virtual machine hardware defined, it is time to calculate the total required capacity. For that we need the number of virtual desktops we are going to provide. For the sake of simplicity let us calculate with 1,000 VDI machines. That gives us a total required capacity of:

- RAM: 4,000 GB

- vCPUs: 2,000

- Disk: 100,000 GB (roughly 98 TB)

Please note that this is the total capacity as seen from the virtual machines. Requirements for physical hardware are much lower because we are overcommitting CPUs and deduplicating disk space.

We do not overcommit RAM. Memory is cheap, just buy enough and avoid the severe performance degradation that occurs when pages need to be swapped out to disk.
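The totals above are plain multiplication. As a minimal sketch (the function and constant names are my own, and the TB figure assumes 1 TB = 1,024 GB), the calculation for any number of desktops looks like this:

```python
# Per-VM virtual hardware as defined in the "Virtual Hardware" section.
RAM_GB_PER_VM = 4
VCPUS_PER_VM = 2
DISK_GB_PER_VM = 100


def total_capacity(vm_count):
    """Return the total capacity as seen from the virtual machines."""
    disk_gb = vm_count * DISK_GB_PER_VM
    return {
        "ram_gb": vm_count * RAM_GB_PER_VM,
        "vcpus": vm_count * VCPUS_PER_VM,
        "disk_gb": disk_gb,
        # Assumption: binary conversion, 1 TB = 1,024 GB.
        "disk_tb": round(disk_gb / 1024, 1),
    }


totals = total_capacity(1000)
print(totals)
```

Keep in mind that these are virtual totals. They say nothing yet about the physical hardware, which depends on CPU overcommitment and deduplication.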

Building Blocks

We are going to build our VDI infrastructure from self-contained building blocks without any central components that might impact scalability. This way, we can add capacity whenever we need it by simply buying another server and placing it next to the others. It is also a very simple approach (the good simple - simplicity means stability).

Selecting a Server Model

Selecting a server model can be hard, so let our requirements act as guidance:

- The customer’s preferred supplier is HP

- Cost is an issue

That narrows it down a bit. We need a mid-range (i.e. 2 CPU) HP server, a model that is mass produced in such high volume that the costs can be kept reasonably low.

Our storage architecture and capacity calculations indicate we need a lot of RAM and many disks. That rules out blades and 1U systems.

An additional constraint I have not talked about yet: we want Intel CPUs. AMD is (unfortunately) far behind these days, both in performance per dollar and in performance per watt.

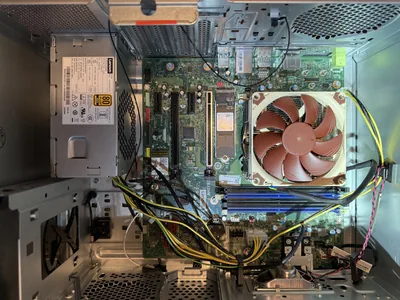

The logical choice is the DL380 G8. Although only a 2U machine it can hold 25 2.5" hard disks and 768 GB RAM. And it is pretty decently priced.

Choosing CPU, RAM and Disks

Now we need to define how we want to configure the building blocks (i.e. servers) for our VDI infrastructure.

CPU

When thinking about which CPU to order keep in mind that even though Xeon CPUs are powerful, they are not faster per core than desktop CPUs. Even the most expensive 12 core server CPU can replace at most six desktop CPUs. In other words: we need as much CPU power as we can get, both in terms of speed and number of cores.

One of the currently best-suited CPUs for VDI is the Xeon E5-2690 v2. With its 10 cores at 3.0 GHz it is among the fastest available. The Xeon E5-2697 v2 could be an interesting alternative because of its 12 cores, but it only runs at 2.7 GHz and costs nearly $600 more (list price $2,618 vs. $2,061).
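To put the two candidates side by side, here is a rough sketch that uses cores times clock speed as a stand-in for total CPU power. That metric is my simplification for illustration only; it ignores turbo, cache and memory bandwidth. Core counts, clock speeds and list prices are the ones quoted above.

```python
from dataclasses import dataclass


@dataclass
class Cpu:
    name: str
    cores: int
    ghz: float
    list_price_usd: int

    @property
    def aggregate_ghz(self):
        # Crude proxy for total CPU power: cores x base clock.
        return self.cores * self.ghz

    @property
    def usd_per_ghz(self):
        return self.list_price_usd / self.aggregate_ghz


candidates = [
    Cpu("Xeon E5-2690 v2", cores=10, ghz=3.0, list_price_usd=2061),
    Cpu("Xeon E5-2697 v2", cores=12, ghz=2.7, list_price_usd=2618),
]

for cpu in candidates:
    print(f"{cpu.name}: {cpu.aggregate_ghz:.1f} GHz total, "
          f"${cpu.usd_per_ghz:.2f} per GHz")

price_difference = candidates[1].list_price_usd - candidates[0].list_price_usd
print(f"Price difference: ${price_difference}")
```

By this measure the 12-core part offers slightly more aggregate capacity, but at a higher price per GHz and with slower individual cores.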

RAM

As the DL380 has 24 DIMM slots one might gain the impression that more or less any amount of RAM can be configured. In reality it is more like the opposite, though: only very few configurations make sense.

The DIMM slots are organized in channels. Each CPU has four memory channels (2 CPUs -> 8 channels). For optimal performance each channel should be configured identically. That means we can only use DIMMs of identical size, and we need to configure either 8, 16 or 24 DIMMs per server. With 16 GB DIMMs we get total RAM sizes of 128, 256 or 384 GB.

We are aiming at running 30-40 VMs per server. With 4 GB per VM we need a net capacity of up to 160 GB per server. Configuring the servers with 256 GB seems like the reasonable thing to do. It leaves enough room for Atlantis Ilio and optional RAM upgrades for select VMs.
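The channel rule makes the list of sensible RAM sizes very short. A small sketch (helper names are mine; slot and channel counts are the ones given above) that enumerates the balanced configurations and picks the smallest one covering the VM memory:

```python
CPUS = 2
CHANNELS_PER_CPU = 4
DIMM_SLOTS = 24
DIMM_SIZE_GB = 16


def balanced_ram_configs(dimm_size_gb=DIMM_SIZE_GB):
    """RAM sizes where every memory channel holds the same number of DIMMs."""
    channels = CPUS * CHANNELS_PER_CPU
    max_dimms_per_channel = DIMM_SLOTS // channels
    return [channels * n * dimm_size_gb
            for n in range(1, max_dimms_per_channel + 1)]


def smallest_config_for(required_gb, configs):
    """Smallest balanced configuration that covers the required RAM."""
    return min(c for c in configs if c >= required_gb)


configs = balanced_ram_configs()
vm_ram_gb = 40 * 4  # upper end of the 30-40 VM target, 4 GB each
chosen = smallest_config_for(vm_ram_gb, configs)
print(configs, chosen)
```

Note that `vm_ram_gb` only covers the VMs themselves. The memory for Ilio and the hypervisor has to fit into the difference between the chosen configuration and that number.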

Disks

With deduplication, disk space requirements mainly depend on the amount of user data stored locally in each VM. In enterprise networks, where most data is kept on file servers, this number can be fairly low. On average, 20 GB per VM should suffice. For up to 40 VMs per server that adds up to 800 GB. Rounding up a little, a net capacity of 1 TB should do nicely. With the disks in a RAID 10 configuration a gross capacity of 2 TB is required.

We want to have as many spindles as possible, so we choose 146 GB drives, which are the smallest 15K drives available. 16 of those give us a gross capacity of roughly 2.3 TB, a little more than the 2 TB we need. As with the RAM there is room for future expansion, which can be important if it turns out that we need more space or more spindles (= more IOPS) than anticipated.
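The disk numbers can be checked with a few lines. This is only a sketch: the helper name is mine, and it works with the nominal drive size, ignoring formatting overhead and whatever deduplication saves on the image data.

```python
DRIVE_GB = 146
DRIVES = 16
USER_DATA_GB_PER_VM = 20
VMS_PER_SERVER = 40  # upper end of the 30-40 VM target


def raid10_capacity(drive_count, drive_gb):
    """Return (gross, net) capacity in GB; RAID 10 mirrors every drive once."""
    if drive_count % 2:
        raise ValueError("RAID 10 needs an even number of drives")
    gross = drive_count * drive_gb
    return gross, gross // 2


gross_gb, net_gb = raid10_capacity(DRIVES, DRIVE_GB)
required_gb = VMS_PER_SERVER * USER_DATA_GB_PER_VM

print(f"gross {gross_gb} GB, net {net_gb} GB, required {required_gb} GB")
```

With 16 drives in use, 9 of the 25 bays in the server stay free for later expansion.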

How Many Servers?

Of the three essential resources in our architecture (CPU, RAM and disk), only one is not overcommitted: RAM. That means the amount of available RAM defines the maximum capacity in terms of VMs per server.

With 256 GB per server and a (generous) reservation of 64 GB for Ilio and 12 GB for VMware we are left with 180 GB for the VMs. That amounts to a max capacity of 45 VMs per server.

In practice we want to stay below that value. For one thing we want to be able to upgrade a VM's memory upon (power) user request - after all, being able to easily adjust a machine's specs is one of the big advantages of VDI. For another thing 45 VMs per server would result in a CPU overcommitment of 4.5:1 with the E5-2690 v2 CPU (90 vCPUs on 20 physical cores). That is on the upper end of the spectrum. Reducing the CPU overcommit ratio to 3:1 seems adequate for knowledge workers. It allows us to run 30 VMs per server. All in all we need 34 servers to host 1,000 VMs.
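Put together, the server count follows from whichever limit is hit first, RAM or CPU. A minimal sketch of that reasoning, with function names of my own choosing and the ratio calculated against physical cores (no hyperthreading), as in the text:

```python
import math


def vms_by_ram(server_ram_gb, ilio_gb, hypervisor_gb, ram_per_vm_gb):
    """Maximum VMs per server when RAM is not overcommitted."""
    return (server_ram_gb - ilio_gb - hypervisor_gb) // ram_per_vm_gb


def vms_by_cpu(physical_cores, overcommit, vcpus_per_vm):
    """Maximum VMs per server at a given vCPU-to-core overcommit ratio."""
    return int(physical_cores * overcommit // vcpus_per_vm)


def overcommit_ratio(vms, vcpus_per_vm, physical_cores):
    return vms * vcpus_per_vm / physical_cores


cores = 2 * 10  # two Xeon E5-2690 v2 with 10 cores each

ram_limit = vms_by_ram(256, ilio_gb=64, hypervisor_gb=12, ram_per_vm_gb=4)
ratio_at_ram_limit = overcommit_ratio(ram_limit, 2, cores)
cpu_limit = vms_by_cpu(cores, overcommit=3, vcpus_per_vm=2)

vms_per_server = min(ram_limit, cpu_limit)
servers = math.ceil(1000 / vms_per_server)

print(ram_limit, ratio_at_ram_limit, cpu_limit, servers)
```

Changing the overcommit ratio or the RAM reservations in this sketch shows quickly how sensitive the server count is to those assumptions, which is one more reason to verify them in testing.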

What About IOPS?

Did you notice that we hardly touched the IOPS topic at all? That is not because I forgot it or think it is of low importance (quite the contrary!). Instead it is due to the theoretical nature of this sizing discussion. There are several ways to size an environment and this is only one of them (for another approach see this article series). In any case it is critical you verify your assumptions and test the platform thoroughly prior to rollout. That is probably the most important step of all.

From my experience I can say that this platform performs well enough. Obviously you cannot expect SSD performance from spinning disks, not even with Ilio. If you want that you need to spend significantly more money.
