Windows x64 Part 3: CPUs, AMD64, Intel 64, EM64T, Itanium


- Published Feb 5, 2008 Updated Mar 13, 2021

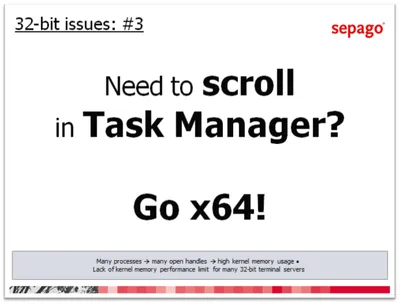

This is the third part of a mini-series on Windows x64, focusing on behind-the-scenes changes in the operating system. In the first two articles I explained key concepts and limitations of the x86 platform: every 32-bit process can use 2 GB of address space, which is more than enough for most applications. However, the kernel is also limited to 2 GB of address space, which can lead to bottlenecks on systems that need to keep track of large numbers of resources, as is typically the case on terminal servers.

With this article I will move the focus away from the 32-bit architecture, which now seems so “small” to us, to concentrate on 64-bit. Let us start with the hardware.

64 != 64

Today many of us take it for granted that we can just grab any computer from the shelf and install Windows x64 on it. But only a few years ago things were very different. Laptops with 64-bit CPUs? No way. Intel pushing x64 to build a future for their best-selling 32-bit platform? Come on. Intel was quite happy with the x86 market and its share of it. They knew, of course, that the x86 architecture would reach its limits sometime in the future. And boy did they prepare for it. Thinking that a complete restart was necessary, they threw x86 overboard and partnered with HP to jointly shoulder the costs of building a completely new architecture from scratch. This proved not only costly but also very difficult. The resulting IA-64 architecture surely has its merits, but its shortcomings are grave. The Itanium chips appeared later than announced, were expensive, and, gravest of all, could not run x86 software. Not with acceptable speed, that is. Although Microsoft felt obliged to provide a special IA-64 version of Windows (and still does, for the time being), what use is an operating system without applications? The Itanium never stood a real chance on the mass market and was quickly satirized as “Itanic”.

AMD was cleverer. They had to be, lacking Intel’s huge development budget. So instead of coming up with a completely new 64-bit architecture of their own, they extended what they had and added 64-bit support to their x86 CPUs, christening the result AMD64. To Intel, this may have seemed laughable at first. But they quickly got over that. Grudgingly, the market leader adopted the concept, adding 64-bit extensions to their Pentium 4 and Xeon processors in 2004. After initially calling these extensions EM64T, they probably realized AMD had the better marketing name and moved to “Intel 64”. Since we now have two compatible extensions both called something 64, the term x64 fits both of them well.

By now, these market adjustments are history. AMD’s bold move forced Intel to react. And they reacted well, switching over to the Core architecture with built-in Intel 64. If you order a computer today, whether it is a laptop, desktop, or server with a CPU from AMD or Intel, it is fully capable of executing both legacy x86 and modern x64 code.

64 == ?

I should probably talk a bit about how x64 differs from x86. On the surface, not very much. Mainly, the width of the processor registers was expanded from 32 to 64 bits, although currently available CPUs can “only” work with up to 48-bit virtual addresses (amounting to a maximum of 256 TB of address space). This may seem like an arbitrary restriction, but today there is simply no need to carry the additional overhead of even larger addresses, and the upper 16 bits can probably be unlocked easily in future CPUs. Microsoft is pragmatic, too, limiting the address width in current versions of Windows to 44 bits, making 16 TB of virtual address space available.
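The figures above follow directly from the address width: n address bits can distinguish 2^n bytes. A minimal Python sketch of that arithmetic follows. The helper names `addressable_bytes` and `format_size` are made up for this illustration, and binary units (1 KB = 1024 bytes) are assumed.

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses for a given address width."""
    return 1 << address_bits


def format_size(num_bytes: int) -> str:
    """Render a byte count using binary units (assumption: 1 KB = 1024 B)."""
    units = ["B", "KB", "MB", "GB", "TB", "PB", "EB"]
    size = num_bytes
    unit_index = 0
    # Divide by 1024 until the value fits the current unit.
    while size >= 1024 and unit_index < len(units) - 1:
        size //= 1024
        unit_index += 1
    return f"{size} {units[unit_index]}"


widths = [
    ("x86, 32-bit addresses", 32),
    ("Windows x64 limit, 44-bit addresses", 44),
    ("current x64 CPUs, 48-bit addresses", 48),
    ("full 64-bit addresses", 64),
]

for label, bits in widths:
    print(f"{label}: {format_size(addressable_bytes(bits))}")
```

Running it prints 4 GB, 16 TB, 256 TB and 16 EB for the four widths, which matches the 256 TB and 16 TB figures quoted above.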

This moves the limits up quite a bit. While I think that we will eventually reach the limits imposed by the new architecture, 8 TB of address space both for the kernel and for each individual process should be enough for the time being. The maximum size of each of the critical kernel memory areas (paged pool, non-paged pool and system PTEs) is 128 GB on x64.

With these numbers in mind, you might ask why not move to the 64-bit architecture immediately. Indeed, why have you not installed every system in your data center with Windows x64? There are many reasons for that, but a very important one is that you need more RAM. Why? x64 applications use 64-bit addresses, which take up twice as much room in RAM as 32-bit addresses. Simply put, x64 programs have a larger memory footprint than x86 programs. Exactly how much more RAM is needed on x64 to provide the same performance as on x86 is a highly controversial topic, with estimates ranging from 20 to 70%. The fact is that simply reinstalling your x86 terminal servers with Windows x64 will reduce the number of users you can put on a single machine.
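Why the estimates vary so much becomes clearer when you look at how individual data structures grow. The following Python sketch models two hypothetical C-style structures, one dominated by pointers and one dominated by plain data, and computes their size with 4-byte and 8-byte pointers. The structures, the helper names, and the layout rule (each field aligned to its own size, total size rounded up to the largest alignment) are assumptions for illustration, not measurements taken from Windows.

```python
def round_up(value: int, multiple: int) -> int:
    """Round value up to the next multiple."""
    return -(-value // multiple) * multiple


def layout_size(fields) -> int:
    """Size of a struct given (size, alignment) pairs.

    Assumes typical C layout rules: every field starts at a multiple of
    its alignment, and the struct is padded to its largest alignment.
    """
    offset = 0
    max_align = 1
    for size, align in fields:
        offset = round_up(offset, align) + size
        max_align = max(max_align, align)
    return round_up(offset, max_align)


def list_node(pointer_size: int):
    """Hypothetical doubly linked list node: prev, next, 32-bit value."""
    ptr = (pointer_size, pointer_size)
    return [ptr, ptr, (4, 4)]


def text_record(pointer_size: int):
    """Hypothetical record: one pointer plus a 32-byte character buffer."""
    ptr = (pointer_size, pointer_size)
    return [ptr, (32, 1)]


for name, make_fields in [("list node", list_node), ("text record", text_record)]:
    x86 = layout_size(make_fields(4))  # 32-bit pointers
    x64 = layout_size(make_fields(8))  # 64-bit pointers
    growth = (x64 - x86) * 100 / x86
    print(f"{name}: {x86} -> {x64} bytes (+{growth:.0f}%)")
```

In this model the pointer-heavy list node doubles from 12 to 24 bytes, while the data-heavy record only grows from 36 to 40 bytes. How much extra RAM a real workload needs therefore depends on how pointer-dense its data is.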

With that, I reach the conclusion for this article. Although the x64 architecture is much more scalable than its predecessor x86, this comes at a price: RAM. You always knew there could never be enough of it. Well, with x64 this is truer than ever.

In the next articles in this series I will explain other, more subtle differences between x64 and x86 that nonetheless need to be thoroughly considered before moving to the new architecture.
