How to Build a State of the Art Backup System for Your Personal Data

Like most people, I have been accumulating personal data over the decades. While some of it is not too important, other pieces are priceless, especially my pictures and videos. Losing those files would be a nightmare. That kept me searching for the ideal backup mechanism for several years. Although I would not dare say I have found the perfect solution, I have learned a lot in the process and can give solid recommendations. Hence this article.

What Are We Talking About?

I currently have roughly 200 GB of private data spread over something like 180,000 files. That is a lot, especially the file count. I will come back to this later.

Requirements

Which requirements must a backup solution meet? What must it be capable of? What are the key points to consider? The following list covers the most important topics in no particular order, since none of them is much less important than the others:

- It must be simple to use. If creating backups is difficult, human nature makes sure that it will not happen and everything else is useless.

- It should be inexpensive. After all, we are talking about personal use here, not company-financed. If it is expensive, people will not even buy it, let alone use it.

- Easy access to old versions of files and folders. One of the more common scenarios where a backup is really needed is the case of accidentally ruining a document you have spent weeks working on. You then want one thing only: the last working version back.

- It must be fast. If it takes the software hours just to analyze what has changed since the last run, people will get frustrated and stop using it. Do you think this is an easy one? Remember that I have around 180,000 files. Many solutions out there were not designed with such large numbers in mind. They simply take too long to scan the file system (or do whatever else it is they do) in preparation for a backup run.

- It must be reliable. As a user, I want to be 100% sure that the software stores my valuable data exactly the way it finds it on disk. And it must store everything, regardless of file system ACLs, mount points, hard links, attributes, encryption, compression, or whatever other obstacles one might throw at it.

- It must be unobtrusive and have no impact on regular computer usage. I have heard of programs that sit in the background all the time and aggressively eat away at the system’s performance. Such solutions tend to be uninstalled pretty quickly and then we are back to square one.

- It must be easy to migrate your data between computers and operating systems. If you upgrade or replace your computer, the backed-up data must be easy to associate with the new system. This is especially important for online backup services, which tend to tie the data to a specific computer.

- Off-site storage: In case of a disaster (burglary, fire, water, …) it does not help if your backup media are located in your home right next to your computer. Both will be gone. If you are paranoid enough to allow for the possibility of such events, you need to store backups of your data off-site.
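The speed requirement is easy to check against your own data. The Python sketch below counts the files and bytes below a folder using `os.scandir` from the standard library and times the walk. It reads only directory metadata, never file contents, so its running time is roughly a lower bound for tools that detect changes by comparing file sizes and timestamps. The function name `scan_stats` is hypothetical.

```python
import os
import time
from typing import List, Tuple


def scan_stats(root: str) -> Tuple[int, int, float]:
    """Walk root and return (number of files, total bytes, seconds taken).

    Only metadata is read, no file contents.
    """
    start = time.perf_counter()
    files = 0
    total = 0
    stack: List[str] = [root]  # folders still to visit (depth-first)
    while stack:
        try:
            entries = os.scandir(stack.pop())
        except PermissionError:
            continue  # skip folders we are not allowed to read
        with entries:
            for entry in entries:
                if entry.is_dir(follow_symlinks=False):
                    stack.append(entry.path)
                elif entry.is_file(follow_symlinks=False):
                    files += 1
                    total += entry.stat(follow_symlinks=False).st_size
    return files, total, time.perf_counter() - start
```

Running it on a data partition, for example `scan_stats("D:\\")`, shows how many files a backup tool has to examine on every run and how long merely listing them takes on your hardware.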

And then there is the restore side of things. This part is often overlooked until it is too late. An old tip, but probably the most important piece of advice you will find: always test the restore procedure in a real-world scenario involving all your data. Even the most elaborate backup systems often lack essential, easily overlooked functionality that makes restores difficult, slow, incomplete or even impossible. Here is a list of what I think is important:

- The data backed up should be stored in an open format that can be read without having to install some restore software first. According to Murphy’s Law, when it comes to the worst and I need to restore my data, the restore software is either not available for my platform, or the vendor has gone out of business so there is no place I can download it from, or, or, or…

- Like the backup procedure, restore must be fast and reliable. “Fast” meaning that it must not take weeks but mere hours to restore everything, and “reliable” referring to data integrity and consistency. You want everything back just the way it was. Not different in any way.

- Restore must be flexible. I want to be able to only restore certain file types, directories or files changed since a certain date. And I might need to restore to a different platform, different drive letter or different file system. In short: Every conceivable option should be available.
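One way to test a restore, and to check the reliability requirement at the same time, is to restore everything to a scratch location and compare it file by file with the original. The Python sketch below does this with SHA-256 hashes from the standard `hashlib` module; the function names are hypothetical. It compares only file names and contents, not ACLs, attributes or timestamps, so it is a first sanity check rather than proof that a restore is complete.

```python
import hashlib
import os
from typing import Dict, List


def hash_file(path: str, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in 1 MiB chunks so large videos do not fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hash_tree(root: str) -> Dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 hash."""
    hashes: Dict[str, str] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            hashes[os.path.relpath(full, root)] = hash_file(full)
    return hashes


def compare_trees(original: str, restored: str) -> Dict[str, List[str]]:
    """Report files missing from, extra in, or different in the restored tree."""
    a, b = hash_tree(original), hash_tree(restored)
    return {
        "missing": sorted(set(a) - set(b)),
        "extra": sorted(set(b) - set(a)),
        "different": sorted(p for p in set(a) & set(b) if a[p] != b[p]),
    }
```

An empty result in all three lists means every original file came back with identical contents.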

Wow, that is quite a list I compiled. This goes to show how complex the issue really is. Few people actually like talking and thinking about backup and restore. It sounds dull and boring. But in reality it is a hot and challenging topic.

Quick and Dirty Assessment of Popular Backup Systems

- Backup to tape: Are you kidding? Way too expensive for home users.

- Backup to DVDs: You are still kidding, right? Just how many disks do you want to juggle? Remember the amount of data I am concerned with.

- RAID: RAID levels greater than 0 offer protection from hard disk failure, nothing more. They do not provide access to older versions of a file, the most important functionality of backup systems. And they do not provide off-site storage of your data.

- Disk images: Right system, wrong use case. Disk images are well-suited for entire OS partitions, but are a poor choice for large amounts of data.

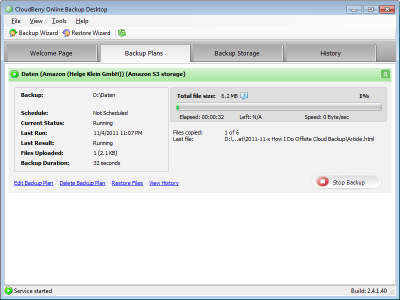

- Online backup: Sounds intriguing at first; it did to me, too. Read about some of my experiences here. Some downsides: backup takes forever on typical ADSL lines, whose upstream bandwidth is only a fraction of the downstream. Restore is not exactly fast, either. And do you really want to clog your internet connection permanently?

- “Traditional” backup software: Sounds like the obvious choice. But data is often stored in proprietary formats (bad). Many programs have horrendous design flaws that manifest themselves only when it is too late (Murphy, again). Most if not all programs are not free, which limits adoption: just ask your friends whether they are willing to spend money on backup software.

- Manual copy to external hard drive: Way too error-prone. Cannot be automated.

- Rsync backup to external drive: Intriguing system. The only flaws I found: rsync does not support paths longer than MAX_PATH (260 characters) and thus potentially cannot back up all your data (this is not only theoretical: I actually encountered this problem). And it is not too fast: the script makes your hard disk churn for a long time before it actually starts backing anything up.
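To find out whether the MAX_PATH limit affects your own data, a quick scan for long paths is enough. The Python sketch below lists every file and folder whose absolute path is longer than a given number of characters; the function name `long_paths` is hypothetical. MAX_PATH (260) includes the terminating null character, so the default threshold is 259 characters. Keep in mind that a path that fits on the source drive can still become too long once it is copied below a deeper destination folder.

```python
import os
from typing import List


def long_paths(root: str, max_len: int = 259) -> List[str]:
    """Return absolute paths below root that are longer than max_len characters.

    Windows' MAX_PATH is 260 including the terminating null character,
    which leaves 259 characters for the path itself.
    """
    found: List[str] = []
    for dirpath, dirnames, filenames in os.walk(os.path.abspath(root)):
        for name in dirnames + filenames:
            full = os.path.join(dirpath, name)
            if len(full) > max_len:
                found.append(full)
    return sorted(found)
```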

My Recommendation

Now that I have made sure that no backup system in existence meets my needs and dissed every solution out there, it is time to give some recommendations on how to do it right.

With Windows Vista, one of the most important new features of Windows Server 2003 has found its way into desktop operating systems: shadow copies. The OS can (and will) automatically take so-called snapshots of your computer’s partitions at defined intervals. A snapshot freezes the file system at a certain point in time, preserving every file just the way it was then. The cool part is that this happens at the block level: snapshots themselves consume hardly any disk space apart from metadata (management data). Only when you change a file that was included in an earlier snapshot are the original contents of the changed blocks copied to a separate storage area. With today’s disk sizes, this essentially means that snapshots come almost “for free”. Great stuff.
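The block-level behavior described above is known as copy-on-write. The toy Python model below only illustrates the principle and is not how Windows implements shadow copies internally: a volume is a list of blocks, a new snapshot is just an empty table, and only the first write to a block after a snapshot saves that block’s old contents. All class and method names are hypothetical.

```python
from typing import Dict, List


class Volume:
    """Toy volume with copy-on-write snapshots (illustration only)."""

    def __init__(self, blocks: List[bytes]) -> None:
        self.blocks = list(blocks)
        # One dict per snapshot: block index -> contents at snapshot time.
        # A new snapshot starts empty, so it costs only this bookkeeping.
        self.snapshots: List[Dict[int, bytes]] = []

    def take_snapshot(self) -> int:
        self.snapshots.append({})
        return len(self.snapshots) - 1

    def write(self, index: int, data: bytes) -> None:
        # Before overwriting, preserve the old block in every snapshot
        # that has not yet saved its own copy of this block.
        for saved in self.snapshots:
            if index not in saved:
                saved[index] = self.blocks[index]
        self.blocks[index] = data

    def read_snapshot(self, snap_id: int) -> List[bytes]:
        """Reconstruct the volume as it looked when the snapshot was taken."""
        saved = self.snapshots[snap_id]
        return [saved.get(i, block) for i, block in enumerate(self.blocks)]

    def snapshot_cost(self, snap_id: int) -> int:
        """Number of blocks a snapshot actually stores."""
        return len(self.snapshots[snap_id])
```

For example, after `v = Volume([b"A", b"B", b"C"])` and `s = v.take_snapshot()`, the snapshot stores zero blocks; writing block 1 twice makes it store exactly one block (the original `b"B"`), while `v.read_snapshot(s)` still returns the volume as it was.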

Snapshot technology is included in Vista Ultimate, Business and Enterprise. If you do not have one of those editions, consider upgrading just for the sake of this feature. Then enable snapshots for your data partitions (by default, Vista enables them only for the system partition). To do that, run “sysdm.cpl”, click the “System Protection” tab and enable snapshots for all data partitions. Do not be confused by the fact that Vista calls this feature “System Restore” and the snapshots “restore points”.

Enabling snapshots lets you “travel back in time”, a feature Apple aptly calls “Time Machine”. Next, you need to mirror all your data regularly to an external USB drive. Robocopy is the tool of choice for this step. Either create your own simple batch script for this task, or use mine (see below).
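Mirroring, which is what robocopy’s `/mir` option does, makes the destination an exact copy of the source: new and changed files are copied, and files that no longer exist in the source are deleted from the destination. The Python sketch below only computes such a plan from two listings and does not touch the disk; the listing format (relative path mapped to size and modification time) and the function name `mirror_plan` are assumptions for illustration, not robocopy’s internals.

```python
from typing import Dict, List, Tuple

# Listing of a tree: relative path -> (size in bytes, modification time)
Listing = Dict[str, Tuple[int, float]]


def mirror_plan(source: Listing, dest: Listing) -> Tuple[List[str], List[str]]:
    """Return (files to copy, files to delete) that make dest mirror source.

    A file is copied if it is missing from dest or its size or mtime differs;
    a file is deleted if it exists in dest but no longer in source.
    """
    to_copy = sorted(p for p, meta in source.items() if dest.get(p) != meta)
    to_delete = sorted(p for p in dest if p not in source)
    return to_copy, to_delete
```

The delete list is why the mirror alone is not enough: a file you deleted by mistake in the source will also disappear from the backup drive on the next run, and only the snapshots still hold a copy.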

Important: Only connect the external drive for backups. Do not (!) keep it connected to your computer all the time. Although this is a little inconvenient, it saves power (and thus money), reduces wear on the external drive and protects it from accidental deletions and virus/malware infections.

Now only one thing is missing: off-site storage. For that, use an old hard drive you were going to throw away, or buy a new one, just as you like. Use the robocopy script to copy all your data to this second drive and deposit it in a trusted location. That might be a relative’s home, your workplace, a friend’s place, whatever. Repeat the procedure every few months or years, depending on your level of paranoia.

You’re done. You do not have a perfect backup system, but you do have a very good one that is simple to use.

Backup Script

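```bat
@echo off
setlocal

:: By Helge Klein

:: Drive letters of the data partition (source) and the external USB drive (destination)
set SRCDRIVE=D
set DSTDRIVE=F

:: Folders to copy, comma-separated
set FOLDERS=Folder1,Folder2

:: /mir   mirror the source tree (also DELETES destination files and folders that no longer exist in the source)
:: /r:0   do not retry files that fail to copy
:: /tee   write output to the console as well as to the log file
:: /np    do not show per-file progress percentages
:: /log+: append to the log file instead of overwriting it
set ROBOOPTIONS=/mir /r:0 /tee /np /log+:backup.log

:: Abort if the external drive is not connected
if not exist %DSTDRIVE%:\ (
    echo Backup drive %DSTDRIVE%: not found. Connect it and run the script again.
    exit /b 1
)

for /d %%i in (%FOLDERS%) do @robocopy %ROBOOPTIONS% "%SRCDRIVE%:\%%i" "%DSTDRIVE%:\%%i"
```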
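The script does not check whether robocopy succeeded. Robocopy reports its result as a bit field in the exit code: 1 means files were copied, 2 that extra files or folders were found in the destination, 4 that mismatched files or folders were detected, 8 that some files could not be copied, and 16 that a fatal error occurred. Any value of 8 or higher therefore indicates a failure (in a batch file, `if errorlevel 8` tests exactly that). The Python sketch below decodes the value; the function names are hypothetical, and the exit code could come, for example, from `subprocess.run([...]).returncode`.

```python
from typing import List

# Robocopy exit code bits as documented by Microsoft
ROBOCOPY_BITS = {
    1: "files were copied",
    2: "extra files or directories were found in the destination",
    4: "mismatched files or directories were detected",
    8: "some files or directories could not be copied",
    16: "serious error: robocopy did not copy any files",
}


def describe_robocopy_exit(code: int) -> List[str]:
    """List the conditions encoded in a robocopy exit code."""
    if code == 0:
        return ["no files were copied; source and destination are in sync"]
    return [text for bit, text in ROBOCOPY_BITS.items() if code & bit]


def robocopy_failed(code: int) -> bool:
    """Exit codes of 8 and above mean that at least part of the copy failed."""
    return code >= 8
```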

6 Comments

Can this be done for Win XP users, or is it just restricted to the 3 versions of Vista you mentioned? I am familiar with System Restore, but I don’t think it extends to data as well on XP.

Good article though.

Matt,

Shadow copies are only partially implemented in Windows XP, meaning they are volatile and do not survive reboots. That makes them unsuitable for the purpose outlined in the article.

Further information can be found here: http://blogs.msdn.com/adioltean/archive/2006/09/18/761515.aspx

Interesting article, Helge. And something that I went through a long time ago myself. I found I didn’t have quite as much data to deal with (I only had around 50-100GB), but it was still an issue. Also, I’m not currently dealing with the videos because that gets a little crazy on the storage front. What I ended up doing was going with iDrive (www.idrive.com). They allow up to 150GB of storage for $5USD/month. Data is encrypted in transfer and stored encrypted on disk. I can retrieve previous versions from a web browser interface from anywhere in the world and it’s completely off-site. It also doesn’t require me to remember to place a hard drive at someone else’s home, etc. That was key for me because if it were left to me remembering to do something, it wouldn’t get done. I have iDrive set up to run on a schedule, nightly at 2am. I could have used the continuous backup protection model, but I didn’t want it stealing bandwidth at unexpected times, and when I first got started they didn’t offer the continuous method.

Good luck with your strategy. A few years ago, a co-worker of mine accidentally formatted his laptop that contained all the photos from his family’s Disney vacation. He lost everything. I thought his wife was going to kill him. LOL

Shawn

Shawn,

I was using mozy until a few days ago, when I realized (http://it-from-inside.blogspot.com/2009/02/mozy-computer-upgrade-data-migration.html) that mozy ties the uploaded data to a specific installation. After I upgraded the OS and hard drive, the backup client did not recognize that a) my data was still the same and b) mozy already had it all. The client tried to re-upload everything, which would literally have taken several months, just like the first time. That kind of put me off.

Another thing that got me thinking: restoring large amounts of data solely from a source on the web is going to be a slow and painful process. In case my hard drive really crashes, I want to have a full copy nearby.

I agree that online backup can complement other solutions well, for it provides off-site storage and a file history.

Pretty good analysis.

We use a similar system for our server backups:

Robocopy of files to another server in another building (Disaster recovery).

Raid 1 for system disk and Raid 5 for data disks.

Backup from a datacenter, from an internal backup server with Legato Networker and VSS for intraday backups or fast recovery.

Perhaps one little thing is missing, something that has saved our lives several times:

An image of the system partition, saved on another server in another building using Ghost or Acronis True Image (I think Ghost is free).

Really useful in case of a disk crash, a missing OS file, a bad patch, or whenever fast recovery is needed (e.g. a print server for 1000 users).

Hope it helps.

Cheers,

Stéphan

Stéphan,

In the article, I focused on backup of data, not installations (OS/programs). For that reason I did not mention the benefits of OS images. But you are right, of course, they can be very useful.