Windows 8 Storage Spaces: Bugs and Design Flaws

When I first read about Storage Spaces in the Windows 8 blog, I was enthusiastic. Finally, a replacement for the Drive Extender technology Microsoft let die so cruelly. Having used Storage Spaces for several weeks now, I am not so sure any more.

What For?

In this article I focus on the media server use case. More and more people need a fool-proof and affordable way to store multiple terabytes of pictures, music, videos and similar content. Traditional storage solutions (including NAS devices) simply do not cut it. They are either too difficult to use, do not provide good data protection or are too expensive. Or all of the above.

Storage Spaces

This is how I summarized the capabilities of Storage Spaces after the initial announcement:

Multiple internal or external drives can be combined into a disk pool out of which one or more thinly-provisioned Storage Spaces can be carved. Spaces are fault tolerant (using either parity or mirroring) and may consist of disks of different types and sizes (minimum one, no fixed maximum). Similar to the deprecated Windows Home Server Drive Extender, Spaces can be expanded by adding additional disks. Failed drives can be replaced while a disk pool is online. Enclosures with a disk pool can be connected to different Windows 8 computers and may be used there if more than half of the disks are available.

That sounds really good. Here is the reality check.

Architecture

A Storage Space operates at the block level while it should work at the file level instead. The benefit of potentially high read speeds (chunks from larger files are stored on different drives and can be read concurrently) is not relevant in SOHO scenarios. But the increased probability of error is.

By splitting files into chunks (called “slabs”) and striping these across disks, it becomes:

- impossible to access a single disk’s data without all other disks being accessible

(you cannot take a disk from the pool and read its data in another computer)

- impossible to add existing disks to the pool without losing the data stored on them

(when adding a disk to the pool, it is initialized and formatted – importing data into the pool by adding a new disk does not work)

- impossible to convert between different redundancy types (none, parity, mirror)

- much more likely that the entire system breaks because failure of a single drive destroys all data on all disks (two drives with parity or one-way mirror)

(in file-based solutions, in case of disk failure only the data on the failed disk is lost, the remainder of the pool remains unaffected)

Implementation

In its current state, Storage Spaces suffer from several bugs that severely impact their usefulness:

- There is no way to change the redundancy mode of existing Storage Spaces without reinitializing the Spaces, deleting all data.

- Only hard disks of identical size can efficiently be combined into a storage pool, because: (maximum size) = (size of smallest disk) * (number of disks)

If you have two 3 TB disks and add a 1 TB disk, you get: 3 TB. 4 TB out of the total capacity of 7 TB are not used. This is discussed

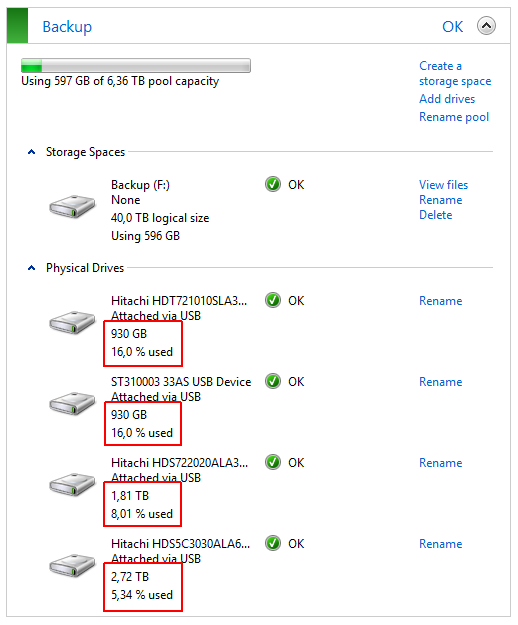

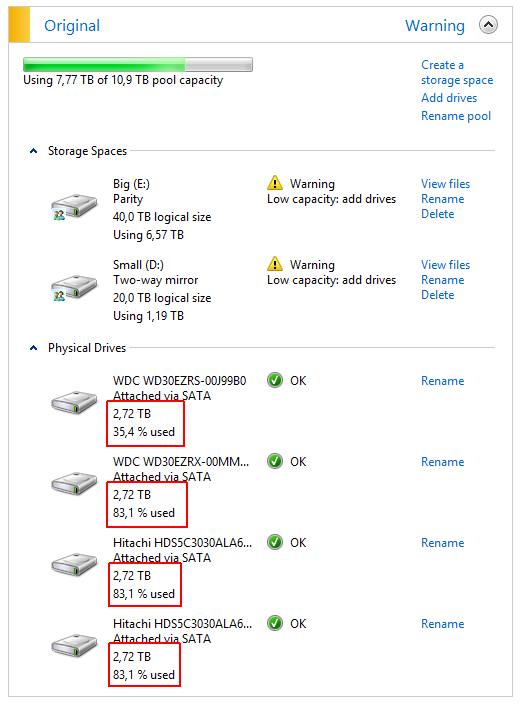

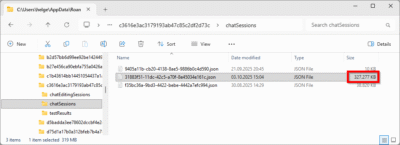

here and the following screenshot from my own system clearly shows the unequal utilization of the disks:

- When adding a new drive to a pool, existing data is not rebalanced. Existing disks stay the way they are (nearly full), while the new drive hardly stores any data:

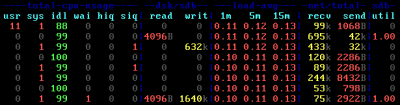

- When copying data to a Storage Space until it is full, the copy operation runs into some kind of loop with the kernel consuming nearly 50% CPU. A restart is required to stop that.

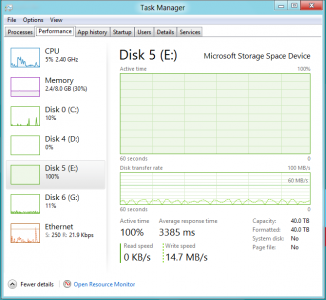

- Low write performance, especially to Storage Spaces in parity mode, but also to Spaces in two-way mirror mode. I have written about that before.
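The capacity formula from the list above is easy to check with a few lines of Python. This is only a sketch of the arithmetic as I stated it, not of how Storage Spaces allocates space internally; the function names are made up for illustration.

```python
def usable_capacity_tb(disk_sizes_tb):
    """Capacity per the formula above: smallest disk times number of disks."""
    if not disk_sizes_tb:
        return 0
    return min(disk_sizes_tb) * len(disk_sizes_tb)


def unused_capacity_tb(disk_sizes_tb):
    """Raw capacity that the formula leaves unused."""
    return sum(disk_sizes_tb) - usable_capacity_tb(disk_sizes_tb)


disks = [3, 3, 1]  # two 3 TB disks plus one 1 TB disk
print(usable_capacity_tb(disks))  # 3
print(unused_capacity_tb(disks))  # 4
```

With three identical 3 TB disks the same functions report 9 TB usable and nothing wasted, which is why only disks of identical size combine efficiently.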

Alternatives

The demise of Drive Extender inspired several developers to create their own variant of a flexible, inexpensive and fool-proof storage pool solution. These are the ones I have found and read good things about:

- DriveBender

Creates a storage pool of multiple drives. Drives can be added to the pool without losing the data stored on them. Existing data is rebalanced so that files are distributed evenly. Drives that have been removed from the pool can be used on any Windows machine without losing data. Optionally files in some or all folders can be duplicated. Does not offer parity as a redundancy mode.

Does not currently work on Windows 8 CP.

- StableBit DrivePool

Surprisingly similar to DriveBender but only for Windows Home Server 2011, Windows Small Business Server 2011 Essentials and Windows Storage Server 2008 R2 Essentials.

- FlexRAID

More options than DriveBender and DrivePool, but also much more complex. Apparently no trial version available.

- SnapRAID

Free command-line copy/backup tool that stores files across multiple drives. Optionally, 1 or 2 drives can be designated for parity (this requires the largest drives in the pool to be used for parity, though).

Conclusion

While I hope that Microsoft will fix the implementation issues present in the consumer preview version of Windows 8, I do not think that they will change the architecture in any substantial way. That is really unfortunate – the chance to provide a fool-proof way of managing the terabytes of data found in modern homes has been lost. Luckily several very interesting third-party products are available.

41 Comments

I think I found another bug in storage spaces. I have a sans digital 8 bay 3.0 usb enclosure with eight 3tb internal drives. When I set-up storage spaces with the eight internal drives, no parity, 50 tb logical volume size, it seems to configure properly in the storage spaces gui.

However, when I try to copy files to the storage space volume using windows explorer, after 20-30 gb, the copy “hangs” and doesn’t work.

Strangely though, when I configure storage spaces initially with four 3tb drives, and thereafter add two more drives, and again another two more drives for a total of eight 3 tb drives, I am able to copy files without any errors.

Any suggestions or solutions?

Regards,

Cary

What you experience is not a bug. You have 8 x 3 TB, so it is only natural that the copy operation stops at 24 TB. The 50 TB you configured is a virtual (theoretical maximum) size only, to be reached only when adding more disks.

OK, when Windows uses a NAS it should not time out the network connection, but it does, and it will do this even while you are copying or moving files. You have to find the service or set a command line to fix it. I still have not found it in Windows 8, but I see stuff for XP and Windows 7. If you find anything on this it would be useful, thanks.

Helge:

It is a bug. I have 24 tb of available storage, but I can’t copy more than 20-30 gigabytes (gb) into the storage pool when I initially setup the pool using eight 3tb drives. When I try again, and initially setup the pool using four 3 tb drives, then add two more 3 tb drives, and thereafter again add another two 3tb drives for a total of eight 3tb drives, windows explorer copy works fine.

Oh, I mistook GB for TB.

Wow, great review. You totally reset my expectations of upgrading my HTPC to Windows 8 for DriveSpaces. While I still plan on moving my TB’s of data to be directly connected to my HTPC. (instead of on a WHS box) I predict I’ll be using either FlexRaid or DriveBender on Windows 7 (and Windows 8 at some point) I wish StableBit DrivePool had a regular Windows version. (non-WHS) I’m familiar with DriveBender testing in the past, but I’ve yet to try FlexRaid. I’m going to tinker with it soon and choose between Flex or Bender.

JazJon I am in nearly an identical situation to you. Leaning towards merging WHS + htpc box and just running win 7/8 with software pooling. Flexraid seems best option atm.

Ok, I think I’m almost sold on picking/trying FlexRaid!

I’ve been using WHS 2011 & easy to use StableBit DrivePool for the past 8 months with two 5 bay eSata enclosures. My NEW migration plan is to hook the same two 5 bay eSata enclosures directly to my powerful Windows 7 Media Center HTPC. (and not use WHS for my main pool of videos/music/pictures any more) I want it all directly on my HTPC for various performance and compatibility reasons. WHS was fun, but not really needed any more with 3rd party pooling being proven effective over the last year.

Since StableBit DrivePool is only supported on WHS, I thought I was going to be forced to use DriveBender. (which has a clunky UI to me from past experiments) I’m sure DriveBender would get the job done, but I’m not feeling confident in testing it over FlexRaid now. Well I’m glad I did some research first and discovered FlexRaid. I’m really impressed with what I’ve seen reading over the Flex Wiki pages. I’ve learned a lot, and I’m ready to start with a Trial.

FlexRaid is only at 5% in this poll

http://www.wegotserved.com/2012/04/11/storage-management-windows-home-server-2011/

(click on FlexRaid!)

FR seems to be off to a great start post 2.0. I’m feeling very confident that FlexRaid will meet my expectations, needs, and then some.

I guess the only hesitation is FlexRaid seems to be the new kid on the block, so I’ve yet to see as many reviews out there. (nothing on youtube for example even) I have a feeling that post 2.0 will bring in more people like me if it’s as good as I’m thinking it will be. Thoughts on FlexRaid?

QUESTION:

When I start my test, I’ll be using a different eSata controller than when I’m ready for production. Will my FlexRaid pool have any problems if the drives get moved around? I know it didn’t matter with StableBit DrivePool. It knew what was what based on the long hidden poolpart folders per drive. Is FlexRaid just as Flexible with moving drives around between reboots etc?

@ Jazjon

I take it you have noticed Alex’s announcement that drive pool is being ported to vanilla windows rather than just the server products.

Dave

Yes, was going to mention the same thing. Alex is eyeing it for Vista on up, probably 64-bit only. See http://forum.covecube.com/discussion/654/drivepool-1-1-and-beyond

And thanks Helge, it’s nice to have this clear update/summary of the state of pooling for Windows. :)

Hi,

I would like to get a few opinions from those of you who have dealt with larger storage systems, at the SOHO level (10+ TB, 10+ drives).

I am a software developer and am knowledgeable with respect to most hardware out there, but I am not a storage geek, and I don’t really know any, so that is why I am reaching out for some suggestions.

I used WHS with DriveExtender until MS threw in the towel. I left WHS (ticked) and went to Win7 after playing with FreeNAS v7/v8 and ZFS. ZFS is a great idea, FreeNAS is a great idea, but in my experience, FreeNAS broke more stuff than it fixed with each v8 beta release (testing was poor), I gave up, as it ate up too much time, too many basic items would remain broken. I would have a hard time going back to it unless I heard some compelling stories from those with truly large data stores on v8+ with ZFS. I am not a fan of the usability of Linux – I must devote most of my time to work, not playing for months to get stuff working.

Here is my current storage setup, which works well, takes little time, but I think I need progress forward and break free from its current limits and what I feel will become a time drain.

Main server at home: Old Core2 Quad, 8G, SSD boot, Win7 Pro, 3Ware 9690SA8i, Chenbro SAS Expander as well (I get these storage items at good prices where I work), 800W Antec PSU, dual Intel aggregated NICs, CAT6 plumbed through the house (Netgear Smart switches), to HTPC and BRPC, etc., and it all works fine – though Win7 Pro does limit any one connection to 1GB – ?. The 3Ware controllers (I have a few) are some of the best pieces of hardware I have ever purchased; I love when stuff just works!

Main case is Antec Twelve, with the 5-bay racks (Addonics, now under 20 other names), so I get 4×5=20 (+2 other = 22) drives in the case (I have not wanted a more-expensive loud rack-based setup). So in that one case I have 11SG(7200rpm) and 11WD(5400) 2TB drives, in 2 arrays, @RAID5, meaning I get 10x2TB usable storage in each array, 18TB. It has been running fine for 2 years, no real issues. A drive goes bad, I RMA it. Heat is not an issue and it is not too loud.

I crudely use RoboCopy to copy from Array1 to Array2, just because I am data paranoid (I have been around and seen it all).

Before you ask….I do all of my TV and Movies off this storage, I don’t do optical disks or real-time TV viewing – I thought I would explain, before you ask why all the storage, everyone does ask. I would toss all this and go with an online media service (I have 24mbps uncapped service), but those services are all hype, unless you only want to view just a few popular tv shows and movies – I have tried most all of the services out there. I would gladly pay for a usable service, but in my opinion it does not exist, yet.

I have a 3rd 18TB RAID5 array (mix of drives in an old case) hanging off the server (via Chenbro 36-port SAS expander), usually powered off, I back up to that at times. I also have a 2nd server with 18TB RAID5, that is usually off. This gives me plenty of chances against Murphy when he comes at my data. My important data (business, code, photos, scans) is shipped offsite, in case really bad things happen.

Generally this system works well, but with 2TB starting to seem old (for me) and less cost-effective than 3TB+ drives, I do not want to purchase more same-size 2TB drives in the future. This is quite the dilemma with hardware RAID, for a home system (this is more-affordable than it would seem), but I worry about the time to maintain this down the road, as drives start to fail. BTW, Soft/Fake RAID consumed so much time, and worked poorly, just say no to FakeRAID.

I am generally familiar (but not experienced) with the available storage concepts out there and had even written some tools for WHS DE back when, so I know what’s usually involved, but since settling on hardware RAID, after WHS DE, I have had no real experience with these others storage solutions.

So, Win8 Storage Spaces comes along, it “sounds” great, and if implemented correctly (MS at times has issues with implementation of stuff) life would be good. Double or triple mirroring, just what my data paranoid mind wants (I will still keep a separate server, always).

But is this new MS stuff really going to work (now or soon)? The parity variety suffers from the smallest drive issue, so I would skip that and use their mirroring. Will I really pull out my 2TB drives, one at a time, and replace them as needed, with 3TB+ devices, and be happy, and still have time to sleep?

I am starting to wonder with some of what I read. Looking for advice from some of you who have tinkered with Storage Spaces with a large number of disks and space. If this is a no go, in the foreseeable future, what would really work well for me, FlexRAID, etc.?

Thanks for any suggestions.

I have a setup very close to yours. I’m using an ASROCK x79 extreme9 motherboard, which gives me 16 sata ports, and using 2 El Cheapo 5port sata multipliers hanging off 2 marvell controllers on the motherboard, for a grand total of 24 sata ports available. I’m using 10x WD 2TB green drives (still get +120MB steady from those), with 3x EARX (sata 3) and 7x EARS (sata 2) variety. They are basically the same, and no mechanical drive can saturate a sata2 link anyway. I have them as single drives, no raid on them. I hate the performance loss and storage space i lose when doing any of the useful raids, like raid-5.

I also have 2×1.5TB seagates, 3x1TB Hitachi’s and 4x500GB mixed WD, Seagate and Hitachi drives, plus an ssd for system.

This adds up to 28TB of storage, on an Antec case as well, with the 3×5.25=5×3.5 cases (el cheapo brand, 15 USD each).

I use snapraid for security and protection of the data, and it has saved my rear end a couple of times when drives died (they do that; Murphy is a SOB that will, and i mean WILL, come knocking). Snapraid is fantastic for the type of data i store (big movie files, tv shows, etc) that are just stored and don’t change much after adding. Simply set it to run at night using task scheduler and it’s fire-and-forget. If a drive dies it will refuse to run, so it won’t kill the protection by accident.

I did try the storage spaces on Windows Server 2012 rtm (i have volume licensing at work, so i could get an early start on it), but the performance is atrocious. Using similar 4x2TB disks, parity, i was getting write speeds of under 20MB/s. That was a deal breaker for me, even before i realized adding odd sized disks to the pool would waste space. I have tried flexraid in the past and liked it, but since it became paid instead of free-as-in-beer, i went to a different approach for pooling. I now use a somewhat convoluted solution, but one that works very well for me. I have a virtual machine running on the server, running linux, where i map the shares for all the drives of storage i have through cifs, then i use something called greyhole to create a pool in linux, which i then share (again) back to the windows box. This works incredibly well performance wise (120+ MB/s sustained, which is more than enough for what i need). I also get the added benefit of running Plex under linux which is more stable (doesn’t die like the windows version).

But the short answer is, no, the windows solution with the storage spaces / pools is not ready for primetime, at least for another iteration or two.

fK

@Steve,

FlexRaid offers a fully functional 14 day trial that you can install on one of your backup/offline boxes to try.

It will not interfere with your data in any way, leaving it stored exactly as is in standard NTFS filesystem on Windows.

You can test pooling your drives and use just one drive ( or more if you wish ) to write to, to test the snapshot raid feature.

I use 20x2TB drives and it works just fine for me with pooling and snapshot raid.

I’ve run into a major problem with storage spaces. My storage space is full. Having a full storage space puts it into an error state, and it goes offline. You can click “Bring online” but it immediately goes offline again.

So, I can’t free space on it, because I can’t get it online to delete stuff. And, more importantly, I can’t get anything off of it because it won’t stay online.

It seems my only option is to add three drives, as I had it set to parity. The only problem? I don’t have three more drives to add.

So, if your storage space goes offline because it is near capacity, DO NOT bring it online and attempt to copy/save more files to it. You will be left in a state where your only option to get it to work again is to add one or more drives.

I’m ready to switch back to StableBit DrivePool as soon as Alex releases a vanilla Windows version.(I’ll even do Beta right out the gate) I didn’t have good luck with FlexRaid’s pooling so I used DriveBender for now. I’m still using FlexRaid’s SnapShot raid though and left file duplication turned off. (to save space) This duo seems to work great, but I’d still feel better using DrivePool long term. I’m ready to switch my HTPC to Windows 8 soon, but will continue using 3rd party Pooling apps since DriveSpaces seems to suck and not be very scale-able/flexible.

Ran into the same “out of space” issue that causes the drive to be taken offline – bringing it back online just caused it to immediately go offline and I couldn’t access the files. Fortunately I had a USB drive I was able to empty and added it as a drive, then brought the pool online, deleted some stuff and physically detached the USB drive, and reformatted it on another computer to get it back to a usable state.

You’d think it would be possible to bring online as read-only or something similar when it’s full.

I’m going to reinstall Windows 7 on this computer and look into one of the other options mentioned above – Storage Spaces was the only real feature I was trying Win 8 for and I hate the new start screen garbage.

One question about Storage Spaces.

What if the system HDD fails and is replaced with a new HDD and a fresh OS?

Will the Storage Spaces setup from the previous OS remain? Or be gone?

Windows 8 recognizes existing Storage Spaces on a disk connected to the computer. That means that you can take an external drive enclosure with a Storage Space on it and connect it to different computers and work with the data.

Thanks to all of you for your comments. This is exactly the type of info I have been looking for. Here is what I have seen with Server 2012 and Storage Spaces.

I just started playing with Server 2012 Essentials (MSDN) and am starting to slowly not like what I see with Storage Spaces. I cannot say anything conclusive, yet, and I hope I am wrong, but I am getting a bad feeling so far. My first parity SS setup was terribly slow. About to start playing with a mirrored setup. We’ll see. I am still trying to understand the difference between Storage Pool and Storage Space. Maybe I am missing something, maybe not.

Is it true that SS does not allow mixing of drive sizes? I thought this was to be tackled. While not trivial, it is solvable. Maybe I am wrong and am missing something with the Storage Pool vs. Storage Space, and virtual-ness here. Maybe I am expecting too much. [I loved playing with ZFS, FreeBSD, not so much]

I was impressed that Server 2012 with Windows Update drivers did bring my 11×2 R5 3Ware array to life. I am playing with my 11 other drives with Storage Spaces.

Because I did not RTFM for Server 2012, I did not expect to hit a wall so quickly, my fault. There’s a 160G minimum HD size for a Server 2012 Essentials installation. Of course you only find that out when you are in the final config stages of the setup. Why not at least warn of this when I pointed to my raw 90G SSD drive in the early setup stages? Lots of fun. It was late in the evening and I wanted to see this install work. So I tried using an old spinning 500G drive for the install. Created a 100G partition (not 160) and it installed just fine. Not sure why MS wants to have a 160G minimum drive size (room to grow?). It seems arbitrary so far, but maybe there is more to it.

So I created a Storage Pool/Space and played. It generally worked. And I can say that with my next install (new 240G SSD, see below), after several reboots, my previously created 11-drive Storage Space WAS seen by my new OS instance. It did not appear at first, and I was ticked, but 2-3 reboots later, after Windows Update, it magically appeared. On a seasoned OS, I would imagine it would appear instantly.

I needed to do another install (or move the image from the 500G spinning drive). So on my day off, I run back to work and buy a Samsung 240, to get me out of the bind. All will be fine, right? Nice new 240G SSD, what can go wrong with the Server 2012 install now?

Well, I found a bug in the Server 2012 Essentials setup (not sure about the other versions) that won’t allow me to install to a Samsung 240G (raw or creating and formatting a partition) when wired to an Intel ICH10 onboard controller. More work. I pulled the nice new SSD, formatted it as NTFS on another machine, reinstalled 2012 pointing to that pre-formatted NTFS Primary partition during install, and all was fine. Only 4-6 hours lost. But that is an improvement from 4-6 days in the early NT4 days. So I guess I should look at the bright side here!

I am going to put Storage Spaces through a workout here. I am not hopeful. I have a feeling I know what I will say when the space fills up and the only solution is to add another drive, while kissing my data goodbye in the meantime. I hope I am wrong and instead all works fine.

So for now, 2 copies of my data are sitting off to the side.

I have my eye on FlexRAID in the background. I may start playing with that as well.

FWIW, I also installed Windows 8 Pro on my X220T Thinkpad, from USB flash, and that install was flawless. I was quite impressed. My first touch of the screen worked fine. No further testing yet.

I will report back my future findings with Storage Spaces, good or bad.

Thanks for all of the input, it really helps.

OK so the final RTM code is out now, and I’m revamping my home setup, so I thought I’d give Storage Spaces a whirl as it beats waiting for an Intel RST RAID set to initialise.

So, I’m currently testing two systems side by side, one is running Windows Server 2012 Essentials, on a C206 chipset board, with a quad-core i5, 8Gb and 6 x 1Tb 5400RPM drives in a Storage Spaces pool. The other is running Windows Storage Server 2012 on a dual core Atom, with 8Gb and 4 x 2Tb 5400RPM drives using Intel motherboard RAID.

Using CrystalDiskMark, the Atom is getting sequential reads of 220MB/s and writes of 140MB/s

Given the massive disparity in CPU power, more drives and the reputed poor performance of Intel RST, one would hope that Storage Spaces would give it a good run for its money….

drum roll…..

The mighty i5 used its processing muscle to power away and score…

127MB/s read and a whopping 29 MB/s write.

That’s beyond poor. Yes, it’s convenient and flexible, but if that convenience comes at the cost of a 4-5 fold drop in performance, what’s the point? It’s difficult to know how MS could have made the performance SO bad.

OK, so the Intel RST RAID 5 has just finished initialising on the server I tried Storage Spaces on, so for a direct comparison on identical hardware and software…

Storage Spaces : 127MB/s read and 29MB/s write

Intel RST RAID : 401MB/s read and 208MB/s write.

Now unless Storage Spaces also needs a lengthy initialisation process before it’s actually ready to use that isn’t mentioned in its management GUI, then that’s quite a gulf in performance, and quite a price to pay for the ease of use and flexibility.
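Put as ratios, the same-hardware figures in the comment above work out as follows. This is just a quick Python sketch of the arithmetic; the numbers are taken directly from the comment and the helper name is only illustrative.

```python
# Sequential throughput in MB/s as reported in the comment above
storage_spaces = {"read": 127, "write": 29}
intel_rst = {"read": 401, "write": 208}


def slowdown(baseline, candidate):
    """How many times slower the candidate is than the baseline, per operation."""
    return {op: round(baseline[op] / candidate[op], 1) for op in baseline}


print(slowdown(intel_rst, storage_spaces))  # {'read': 3.2, 'write': 7.2}
```

So on identical hardware the gap is roughly threefold for reads and sevenfold for writes.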

Hello,

I stumbled across this blog from reading about huge 40TB home servers running SAS cards and UnRAID, but was keen to know more about Win Storage spaces – so it’s good to know its limitations

From what I’ve read about Flexraid, it appears to be better for not being destructive to the whole array

Have you used it? Can you comment on its ability to use all space on dissimilar size disks, the performance, the expandability with new drives added?

Finally, have you settled on a final choice for storage pool management in your Win home server?

Thanks

I have not used Flexraid. I use Drive Bender on my home server.

Thanks for your perspectives. You have highlighted two different storage strategies.

I’m evaluating Storage Spaces myself. There is method to Microsoft’s madness.

1. As you realized, Microsoft moved from file level to the slab level, which is a similar concept to blocks, only larger. A lot of people, like me, are not able to work with file level storage or backup. Microsoft is ~15 years behind what we’ve been buying from third-party vendors because file level redundancy can’t work when you have a lot of data. Working at sub-file level allows for deduplication, which in turn makes getting a daily backup done in a day possible, and allows for replicating data remotely. If you maintain, or want to maintain a lot of data, you cannot achieve that from file-level storage and redundancy. Replicating over WAN, LAN, or to other disks is way faster. E.g., if 20 megs change in one area of a 20 gig virtual machine, you would move 20 gigs with file-level and a max of 256 megs with Storage Spaces. EMC made a pretty good living for a long time providing redundant storage and backups that way. It’s what makes continuous backups possible, and mounting backups as virtual machines on new hardware, either locally, or at a remote location. It allows us to test things before going live with a point-in-time copy of the real deal in a VM without restoring gigs upon gigs of data. Vista and Server 2008 were the first Microsoft products that RELIABLY worked intelligently at the block level, with the underlying pieces having matured with Shadow Copy in XP. Working at less than file size also enabled versions.

2. File level, as you noted, does have its advantages in many situations. Any time you have many smaller files, like pictures, documents, etc., and/or large files that don’t change, file-based storage may be a better fit, especially if the alternative is working with something that works in chunks that are larger than your average file size. This opens up a pretty big market for third-party products..

ZFS is far more efficient and mature, but the huge advantage Storage Spaces has, is it is Windows. It means you can use your storage server to do about 100 other things. So my thoughts are, if file based is what you need, and Windows is what you want, go third party. If you need something that works in chunks, it’s between Storage Spaces and ZFS. The only dis-qualifier for Storage Spaces is if it can’t keep a GigE pipe full. If it can, then the value proposition becomes the free and very efficient ZFS, vs. the costlier Storage Spaces balanced against being able to use the server for about a thousand different things, easily, and inexpensively that you would not be able to duplicate in the *NIX world. (BTW, I’m very fluent in UNIX as well.)
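The 20 gig virtual machine example from the comment above can be worked through in a short Python sketch. It assumes a fixed 256 MB slab size (the figure used in the comment) and a simplified model where replication copies every slab that a change touches; the function name and the change offsets are hypothetical.

```python
MB = 1024 * 1024
GB = 1024 * MB
SLAB_SIZE = 256 * MB  # slab size as mentioned in the comment


def bytes_to_replicate(file_size, changes, slab_level, slab_size=SLAB_SIZE):
    """Bytes to copy after the given (offset, length) changes to one file."""
    changes = [(offset, length) for offset, length in changes if length > 0]
    if not changes:
        return 0
    if not slab_level:
        return file_size  # file level: any change means the whole file moves
    touched = set()
    for offset, length in changes:
        first = offset // slab_size
        last = (offset + length - 1) // slab_size
        touched.update(range(first, last + 1))
    return min(len(touched) * slab_size, file_size)


vm_size = 20 * GB
change = [(1 * GB, 20 * MB)]  # 20 MB changed in one area of the image

print(bytes_to_replicate(vm_size, change, slab_level=False) // MB)  # 20480
print(bytes_to_replicate(vm_size, change, slab_level=True) // MB)   # 256
```

Note that in this model a change that happens to straddle a slab boundary touches two slabs, so 512 MB would move instead of 256 MB. That is still a small fraction of the full 20 GB.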

Interesting post, thank you! It is quite a fair review according to my experience with Windows 8. Thanks.

To all googlers who spend hours searching the net for a solution to the rebalance problem in a parity configuration (Like me ;). At the moment (January 2013) there is no Microsoft tool that does the job (including PowerShell commands). To ensure that all hard drives can get the same level, you have to copy all the data once, and then delete the originals. That takes a while, but is currently the only way.
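The copy-then-delete workaround described in the comment above could be scripted roughly like this. It is a minimal Python sketch, not a tested rebalancing tool: it assumes that rewriting a file is enough to have its data placed afresh (that is the commenter's claim, not something the script verifies), it needs free space for at least the largest file, and the temporary suffix is made up. Try it on a backup first.

```python
import os
import shutil
import tempfile


def rewrite_file(path):
    """Copy a file next to itself, then replace the original with the copy."""
    tmp_path = path + ".rebalance.tmp"  # hypothetical temporary suffix
    shutil.copy2(path, tmp_path)        # the copy is written as new data
    os.replace(tmp_path, path)          # the original is dropped, the copy kept


def rewrite_tree(root):
    """Rewrite every regular file below root and return how many were done."""
    count = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full_path = os.path.join(dirpath, name)
            if os.path.islink(full_path):
                continue  # leave symbolic links alone
            rewrite_file(full_path)
            count += 1
    return count


# Demo on a throwaway directory so nothing real is touched.
with tempfile.TemporaryDirectory() as demo_root:
    sample = os.path.join(demo_root, "photo.jpg")
    with open(sample, "wb") as handle:
        handle.write(b"example data")
    print(rewrite_tree(demo_root))  # 1
```

On a real pool you would call `rewrite_tree` with the drive letter or folder of the Storage Space instead of the temporary directory.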

Regards

Just stumbled across this while searching for Storage Spaces info. I’ll quickly explain that most of this is right over my head but if you can give a simple answer to my simple requirement: is SS a good way to go for me, I have a box with 6 x 3Tb drives (its got 8 slots so may fill up later) connected by eSata to my PC and I want to use it as a straight backup box, no redundancy/resiliency needed as backing up from another (Drobo, connected by iSCSI) box that has already got that. I started SS and can see the drives there fine so is it suitable for my very basic use? If so can you simply add another disk in future and that will just increase the space available? Grateful for a clear and simple reply, thanks!

Do you know if MS has made any improvement or fix to the problem described above? I need to expand my data pool with more drives, so I am doing some research on how to do it. In my research, many people complain that SS just “loses” the pool and the storage comes up as RAW (asking for a reformat). Other people say that you cannot add just a single drive; you need to add the same number of drives.

This info makes me very nervous about my current setup. Just wondering if MS has made any improvement or patch to fix the problems.

Thanks for the article.

Any chance SP1 will be addressing these shortcomings?

They’ll probably add the missing features as they go… but for now I’m going to be continuing to use my Linux boxes for storage. MD/LVM have had all of these issues solved for ages: migrating between raid levels, draining a disk so you can remove it, snapshots, etc. I’ve been playing with btrfs for the last few days, and it basically looks like what Storage Spaces should be: rebalancing, changing parity levels. I’ll put all my drives in that, and export out iSCSI devices for Windows machines.

Also suppose I could run the storage Linux machine INSIDE Windows as a VM. Kind of silly, but it would seem to be able to work fine.

Hi all,

Very interesting post, thanks for all the great information.

But like Yi I am also curious if these problems still exist or have been solved?

Regards,

Peter Vrenken

These problems are still here. I am struggling with them at this moment.

There is a solution to the main problems presented in this article…

A lot of the problems that people are experiencing arise because of how Storage Spaces lays out a volume. When you initially create a storage pool from multiple physical disks and then create a volume to store your files, Storage Spaces looks at the number of disks in your pool and locks the newly created volume into a strategy that only works for that exact number of disks, and multiples of that number.

Putting aside mirroring and parity (to make the following point clearer) if you create a volume on top of a Storage Space made up of 3 physical disks, that created volume will forever be bound by multiples of 3. As people have observed, that means that larger disks are constrained by the smallest disk in the set of three (the volume wants to spread out equally across the 3). It also means that in order to extend the size of that volume, you will need to add 3 more disks, not 1 or 2. If you just add 1 more disk then the volume you originally created (which is locked into multiples of 3) cannot work with just one or two newly added disks.

The reason this happens is for performance — data is striped equally according to the number of disks detected when the volume was created.

Now, for those of us who do not need the performance of data striping, it is possible to create a volume and manually set the number of “columns” it should be constrained by. Setting the value to 1 (even if you have 3 disks in your pool) will alleviate most of the problems. You will no longer be constrained by the smallest disk in your pool – you can start with uneven disk sizes and it will fill them up vertically instead of horizontally. Similarly, you will no longer be constrained by having to add multiple disks (matching the original pool count) in the future – you can add a single disk at a time.
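The column behaviour described in this comment can be sketched with a toy model. This is only an illustration under stated assumptions, not how Storage Spaces is actually implemented: capacity is counted in abstract slab units, mirroring and parity are ignored (as in the comment), every stripe is assumed to need one free slab on each of `columns` different disks, and the function name `usable_capacity` is made up for this example.

```python
def usable_capacity(free_per_disk, columns):
    """Count slabs that can still be written under a toy striping model.

    Assumption (not taken from Storage Spaces itself): every stripe needs one
    free slab on each of `columns` different disks, and the allocator always
    picks the disks with the most free space.
    """
    free = sorted(free_per_disk, reverse=True)
    written = 0
    # Keep going while at least `columns` disks still have a free slab.
    while len(free) >= columns and free[columns - 1] > 0:
        for i in range(columns):
            free[i] -= 1
        written += columns
        free.sort(reverse=True)
    return written


# Three disks of 1, 1 and 3 units, no mirroring or parity.
print(usable_capacity([1, 1, 3], columns=3))  # 3: limited by the smallest disk
print(usable_capacity([1, 1, 3], columns=1))  # 5: every disk fills up completely

# The 3-column space is full; 2 units on the big disk are stranded.
print(usable_capacity([0, 0, 2, 3], columns=3))        # 0: one new disk does not help
print(usable_capacity([0, 0, 2, 3, 3, 3], columns=3))  # 9: three new disks do
```

In this model, a 3-column space gains nothing from a single new disk because no stripe can find three disks with free slabs, while a 1-column space can use every slab on every disk.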

What you lose is some performance, and that should be all. You may even gain some resiliency, because without striping, files will likely cluster naturally onto individual disks instead of being spread across them. I do not think you can simply plug one of the disks into another machine and see the files, but low-level disk recovery tools may be able to reconstruct part of the set of your family photos (I haven’t tested it though!).

Bottom line: if you are using Storage Spaces as part of a business server with 100 users, go for striping; it will be faster (and you can probably afford to add disks in high multiples anyway). If you are using Storage Spaces in the home for storing infrequently accessed media and photos for a small family of users, consider creating your disk with just 1 column (or creating your volume when only 1 physical disk is plugged in) so it can then scale vertically in the future without the annoying horizontal striping constraints.

You can find out how to make a 1 column volume here if you don’t want to unplug a load of disks:

https://blogs.technet.com/b/askcore/archive/2013/12/18/cannot-extend-simple-virtual-disk-in-windows-server-2012-r2.aspx

It also explains how to see the number of striping columns your existing volume is forever locked into.

Interesting – thanks for sharing!

Of all of these solutions, FlexRAID is the best. It is far more polished and is the only solution that tries to fully solve the issue of storage pooling with data protection.

FYI, they do have trial versions of their products.

I got an email about their transparent RAID software, and I must say it is a delight.

I had been using their snapshot RAID software and it is great, but that new transparent RAID thing is everything I was personally looking for.

Long term, I think these FlexRAID guys have the most potential when you look at their approach and solutions. I even read about their upcoming NZFS and how it will be better than ZFS.

Anyway, I am going to spend the whole weekend playing with that transparent RAID thing. Cool stuff.

I’m less critical than you (the blog author) of Storage Spaces’ block/slab orientation for virtual disks and storage pools. In the enterprise SAN market, this is how many vendor products work under the hood. Compellent SANs run concurrent RAID-10 and RAID-5 sets on the same group of physical disks and move blocks between these RAID levels automatically (RAID-10 for writes, RAID-5 for reads). As soon as a volume replay is taken, written data gets moved to RAID-5, which carries a negligible read penalty and gives a meaningful capacity benefit. MS’s strategy also provides for tiering, keeping hot blocks on SSDs and cold blocks on traditional HDDs (which is also extremely similar to Compellent tiering).

I do agree with the bugs/missing features. An even worse problem, though, is the inability to move virtual disks between storage pools. How are you ever supposed to migrate hardware without a lot of nasty recreation of new virtual disks and application/filesystem level disk moves? This is a problem solved ages ago by SAN vendors who seamlessly and without any downtime allow shelves to be added and removed.

Double parity (aka RAID-6) and 3-way mirroring also require more disks than I’d expect. A 3-way mirror should really only require 3 disks, so why 5? And most implementations of double parity work with as few as 4 disks, yet Storage Spaces wants 7.

I also think that (as in so many other recent MS products) the GUI is greatly underutilized in favor of PowerShell. Disk arrangement as a concept is something highly graphable, and it’s really brain damaged that things like pool column layout can’t be shown visually (or even chosen at all) via the GUI.

Some of these initial setup tweaks (which you’re stuck with if you don’t think about them ahead of time) could be avoided if there was more background intelligence built into the system so it always did the right thing. For example, when adding a disk to the pool, data should be automatically rebalanced across the entire pool membership. For parity disks, this would mean restriping them across the additional disks; for mirrored disks, rearranging which disks they live on for greater flexibility and less contention.

If adding or expanding a virtual disk to the pool requires a rebalance operation, then it should just happen, and the same with removing a physical or virtual disk. Obviously, all within the general limitations of total pool capacity.
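To make the rebalancing idea concrete, here is a minimal sketch of what such a background pass could look like. It is a toy model, not a description of anything Storage Spaces does: the function name `rebalance` and its arguments are hypothetical, usage and capacity are counted in slabs per disk, and column and resiliency constraints are ignored entirely.

```python
def rebalance(used, capacity):
    """Move one slab at a time from the fullest disk to the emptiest one.

    Toy model with hypothetical names: `used` and `capacity` are slab counts
    per disk; column and resiliency constraints are ignored.
    Returns the new per-disk usage and the number of slabs moved.
    """
    used = list(used)
    disks = range(len(used))
    moves = 0
    while True:
        src = max(disks, key=lambda i: used[i] / capacity[i])
        dst = min(disks, key=lambda i: used[i] / capacity[i])
        # Stop once a further move would leave dst fuller than src.
        if (used[dst] + 1) * capacity[src] > (used[src] - 1) * capacity[dst]:
            break
        used[src] -= 1
        used[dst] += 1
        moves += 1
    return used, moves


# Two disks at 60 % plus one freshly added empty disk of the same size.
print(rebalance([6, 6, 0], [10, 10, 10]))  # ([4, 4, 4], 4)
```

A real implementation would also have to throttle the moves and keep every stripe's copies on separate disks, which is where most of the complexity lies.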

Unfortunately and in typical MS fashion, they’re blazing ahead with new features (which look cool on paper) in Server 2016 but not apparently fixing some of these basic usability limitations.

I am looking for any suggestions, as I have spent more than 20 hours working with MS, reading through blogs and creating/deleting storage pools to simulate scenarios.

Basically, I had a storage pool (a 2-way mirror) with a “Retired” drive, and when I worked with Microsoft they asked me to attach a USB drive to the existing storage pool in order to remove the retired one.

Adding the USB drive (2 TB with 1.5 TB of existing backup data) sort of worked, but I ended up having to remove the storage pool and recreate it.

However, the old pool still shows my old drives plus the one “OK” USB drive that is part of the pool. But the storage pool is “Red”, has an error of “Inaccessible; check the physical drives section” and only allows me to delete the pool.

I do NOT want to delete it, for fear (confirmed by testing) that doing so will not only remove my USB backup drive from the pool but also make my data inaccessible (the data that was on the drive before it became part of the pool).

I purchased data recovery software and scanned the drives. I can see some of the data, but the name/file structure seems a little off, and my preference would be to access my original files on the USB drive (videos and pictures mainly) and delete the useless storage pool.

Does anyone know how to access data that was on a drive before it became part of a storage pool, or a safe way to remove a drive from a storage pool without formatting it and losing the volume? I don’t have anything on the existing pool, so basically it is doing nothing.

Got here researching ReFS, via Wikipedia. Anyway, what you describe in the article is how enterprise storage works. Surprise!

What you seem to be saying is, even though you are not aware of it, “Microsoft should just give up this nonsense and port ZFS”.

Since OpenZFS is free and open source, they could have done it. They could still do it. Then you could mix and match different types of storage vdevs. Want to add a mirror to a RAIDZ? No problem. Want to add a RAIDZ3 vdev to a pool which already has RAIDZ1, RAIDZ2, and several mirrors? Yes, you can. What happens to the data? It is re-striped in the background across all the vdevs.

Don’t have the disks? No problem, just do

zfs set copies=2 your_pool_name

Apple ported ZFS to OS X (although they backpedalled, but there is a 3rd party port). FreeBSD has fully integrated ZFS as a bootable filesystem. Even GNU/Linux garbage has ZFS support via the venerable “ZFS on Linux” project.

What reason could there possibly be for Microsoft not to do the same?

@UNIX admin,

I agree with you that ZFS is a great filesystem. But it obviously does not meet the requirements the author of the article above has. It is, like Storage Spaces, block-oriented rather than working at the file level. You also can’t add disks that already contain data without losing their data. And the space restrictions when combining disks of different sizes are quite similar.

And what you say about restriping in the background just isn’t true. While you can easily add new vdevs, the already existing data on the old disks remains there and is never rebalanced onto the new disks. Only newly written data gets striped over all disks, which would force you to rewrite all existing data if you wanted it to get evenly spread over all disks including the new ones.
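A small simulation illustrates the point about existing data staying where it is. This is a simplified sketch under an explicit assumption: each new block goes to the vdev with the most free space, whereas the real ZFS allocator, as far as I know, weights vdevs by free space rather than always picking the emptiest one. The function name `write_blocks` and the numbers are made up for the example.

```python
def write_blocks(used, capacity, n_blocks):
    """Place new blocks one at a time on the vdev with the most free space.

    Simplified assumption: the real ZFS allocator weights vdevs by free space
    rather than always picking the emptiest one. Existing blocks never move.
    """
    used = list(used)
    for _ in range(n_blocks):
        target = max(range(len(used)), key=lambda i: capacity[i] - used[i])
        if used[target] >= capacity[target]:
            raise RuntimeError("pool is full")
        used[target] += 1
    return used


# Two vdevs that are 80 % full, plus a newly added empty vdev.
print(write_blocks([80, 80, 0], [100, 100, 100], 30))  # [80, 80, 30]
```

The two old vdevs stay at 80 blocks each: only the new writes land on the added vdev, so old data would have to be rewritten to end up spread across all three.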

By the way, I’ve been using ZFS on Linux for more than a year (Linux far longer) with great success and would not call it garbage at all:-)

Regarding the point about rebalancing existing data after a new disk has been added to the pool, please note that, from Windows 10 / Windows Server 2016, you now have the “Optimize-StoragePool” PowerShell command. More details here [1].

[1] https://stebet.net/microsoft-finally-adds-rebalancing-to-storage-spaces/