ClearType Bandwidth Revisited – Testing 32 Bit Color Depth

In an earlier post I wrote about how the bandwidth requirements of RDP are affected by enabling font smoothing (ClearType over RDP version 6) on Windows Server 2008.

Jan, a reader of that article, posted an interesting comment: he had heard that RDP version 6 was optimized for a color depth of 32 bits and asked me to repeat my tests with that setting. That raises an interesting question: how is the bandwidth usage of font smoothing affected by the color depth used?

When I prepared the test environment for my initial article on this subject, I consciously chose a color depth of 16 bits, mainly because I thought that 16 bits was the most widely used color depth on production systems. I did not, however, think about how different color depths might change the numbers measured.

Setting Up More Tests

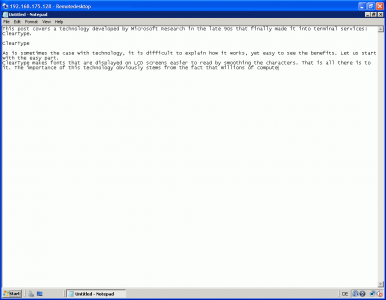

I have done so now. In this article, I present my test results for a color depth of 32 bits. The setup is identical to the one used before. I used the remote desktop client version 6 on my laptop running Windows XP to connect to a virtual machine running Windows Server 2008 on the same laptop. An AutoIt script in the startup folder of the test user’s roaming profile opened Notepad, typed a lengthy text at a realistic typing speed and then logged off the session. I measured the bandwidth requirements by monitoring the performance counter “Total Compressed Bytes” of the “Terminal Services Session” object. I ran the test twice with font smoothing enabled and twice with it disabled. Before each iteration, I deleted the bitmap cache of the remote desktop client.
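To make the measurement step concrete, here is a minimal sketch of how an average rate in Bytes/s can be derived from readings of such a counter. It assumes the counter is read as a running total at known points in time. The function name `average_rate` and the sample readings are hypothetical and serve only as an illustration; they are not data from my tests.

```python
def average_rate(samples):
    """Return the average rate in bytes per second.

    samples: list of (seconds, cumulative_bytes) tuples, ordered by time.
    Assumption: the counter is a running total, so the rate is the
    difference between last and first reading divided by elapsed time.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    (t_start, bytes_start), (t_end, bytes_end) = samples[0], samples[-1]
    if t_end <= t_start:
        raise ValueError("samples must span a positive time interval")
    return (bytes_end - bytes_start) / (t_end - t_start)


# Hypothetical readings (time in seconds, counter value in bytes).
readings = [(0, 10_000), (10, 12_650), (20, 15_300)]
print(average_rate(readings))  # 265.0
```

Only the first and the last reading matter for the average, which is why the counter can be sampled at any convenient interval during the typing phase.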

The Results – Font Smoothing Disabled…

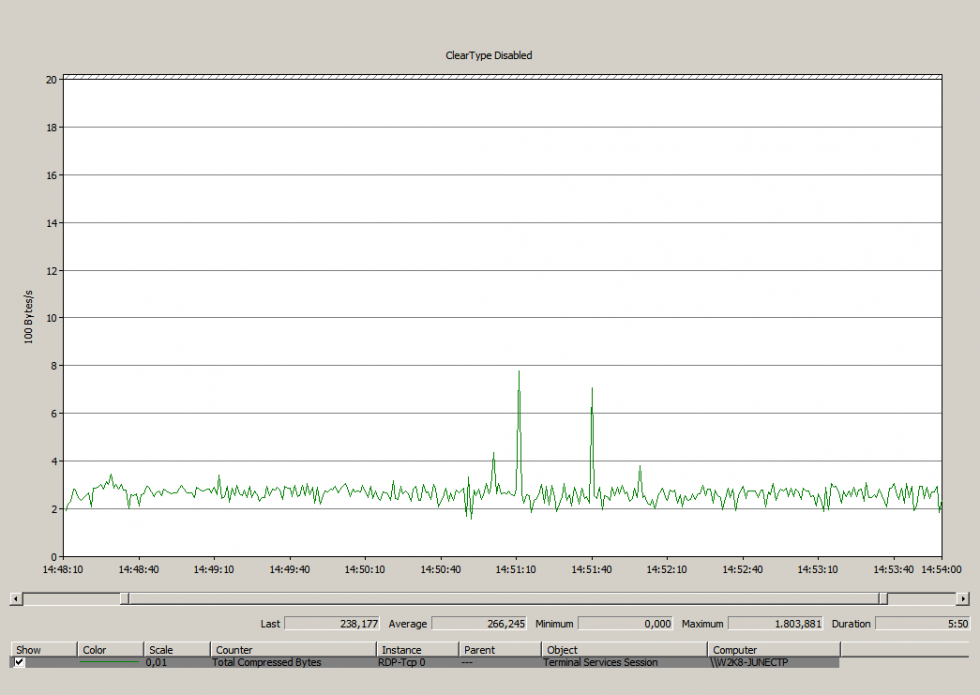

I first tested with font smoothing disabled. Interestingly enough, bandwidth usage does not change when switching from 16 to 32 bits. I measured practically identical numbers: on average 266 Bytes/s while typing the text, compared to 264 Bytes/s in 16-bit mode. The reason for the similar numbers becomes clear when you think about how text is displayed without font smoothing: solid black and white pixels only. Since there are no hues on the screen, 16 bits are more than enough; in effect, 1 bit would suffice. The screenshot above shows the bandwidth usage during the measurement.

…and Enabled

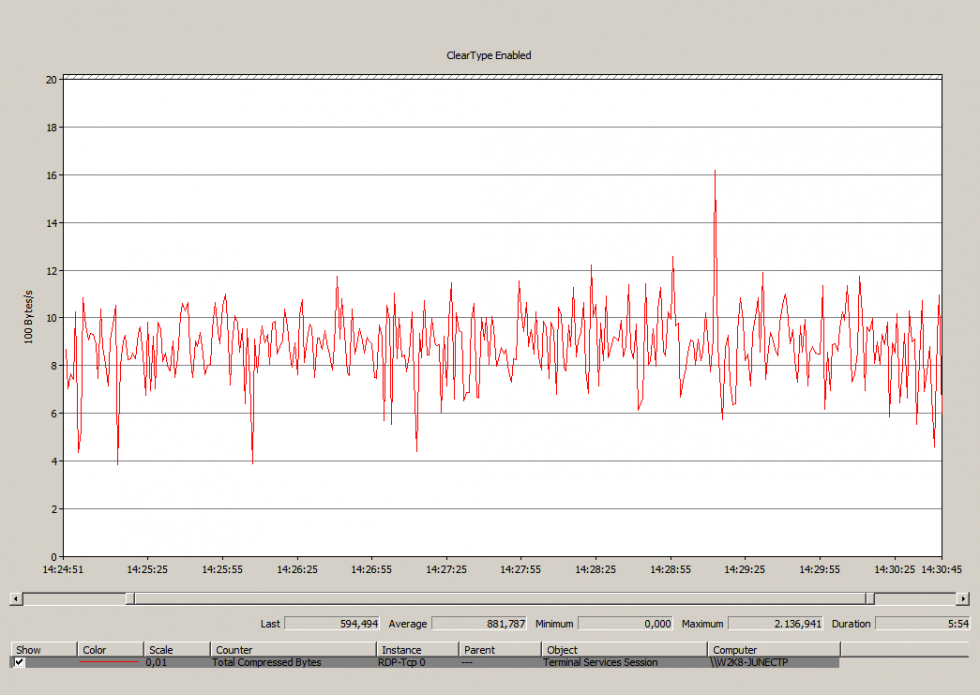

The picture changed dramatically when I switched font smoothing on. At first, I could not believe my eyes and thought I had made a mistake.

Only when I repeated the test and got similar numbers did I believe what I saw: on average, 1,753 Bytes/s were transported over the network with font smoothing enabled in 32-bit mode. Apparently, ClearType uses the available hues very effectively in order to render text more smoothly. That is the only explanation I have for the fact that the bandwidth consumed nearly doubles when the color depth is changed from 16 to 32 bits (the factor I measured is 1.986).

Conclusion

Enabling font smoothing dramatically increases the bandwidth required for displaying text over RDP. The higher the color depth, the higher the toll paid for smooth fonts. While at 16 bits per pixel the factor is “only” 3.35, this number almost doubles when the color depth is changed to 32 bits. With 32 bits per pixel, enabling font smoothing increases the bandwidth usage when displaying text by a factor of 6.6.
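The factors quoted above can be checked with a few lines of arithmetic. The sketch below uses only the averages stated in this article. The 16-bit rate with font smoothing is not given here, so it is derived from the rounded factor 3.35, which is an assumption of the sketch; for that reason the resulting ratio between color depths differs slightly from the 1.986 I measured.

```python
# Averages stated in this article (Bytes/s).
rate_32_plain = 266    # 32 bits, font smoothing disabled
rate_32_smooth = 1753  # 32 bits, font smoothing enabled
rate_16_plain = 264    # 16 bits, font smoothing disabled
factor_16 = 3.35       # 16 bits, smoothing factor (rounded)

# Cost of font smoothing at 32 bits per pixel.
factor_32 = rate_32_smooth / rate_32_plain

# Assumption: 16-bit smoothed rate reconstructed from the rounded factor.
rate_16_smooth = rate_16_plain * factor_16

# Increase when going from 16 to 32 bits with smoothing enabled.
depth_ratio = rate_32_smooth / rate_16_smooth

print(f"{factor_32:.2f}")       # 6.59
print(f"{rate_16_smooth:.1f}")  # 884.4
print(f"{depth_ratio:.2f}")     # 1.98
```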

While I still think that ClearType is a great technology that is indispensable when working on LCD monitors, I recommend that administrators limit the maximum allowed color depth on their Windows Server 2008 terminal servers to 16 bits unless bandwidth is of no concern. The limitation to 16 bits per pixel is, by the way, the default setting in the June 2007 CTP version of Windows Server 2008 on which I performed these tests.